Give people control over AI systems that impact their lives

While government AI systems have the potential to improve things like the efficient delivery of services to citizens, they can also harm people’s autonomy. So, how can public AI systems be designed to ensure citizens maintain control over their lives? Based on his PhD research, Kars Alfrink argues that it is vital to give citizens a say in when, how, and for what purposes these systems are developed and used.

From the beginning of his PhD, Alfrink wanted to focus his research on ‘smart city’ systems, which use technology to make things in the city work more efficiently and effectively. Typically developed by public/private partnerships, these often complex systems may not be well understood by citizens. From the perspective of the government and its suppliers, who want people to accept these systems, increasing transparency should lead to greater trust in AI systems.

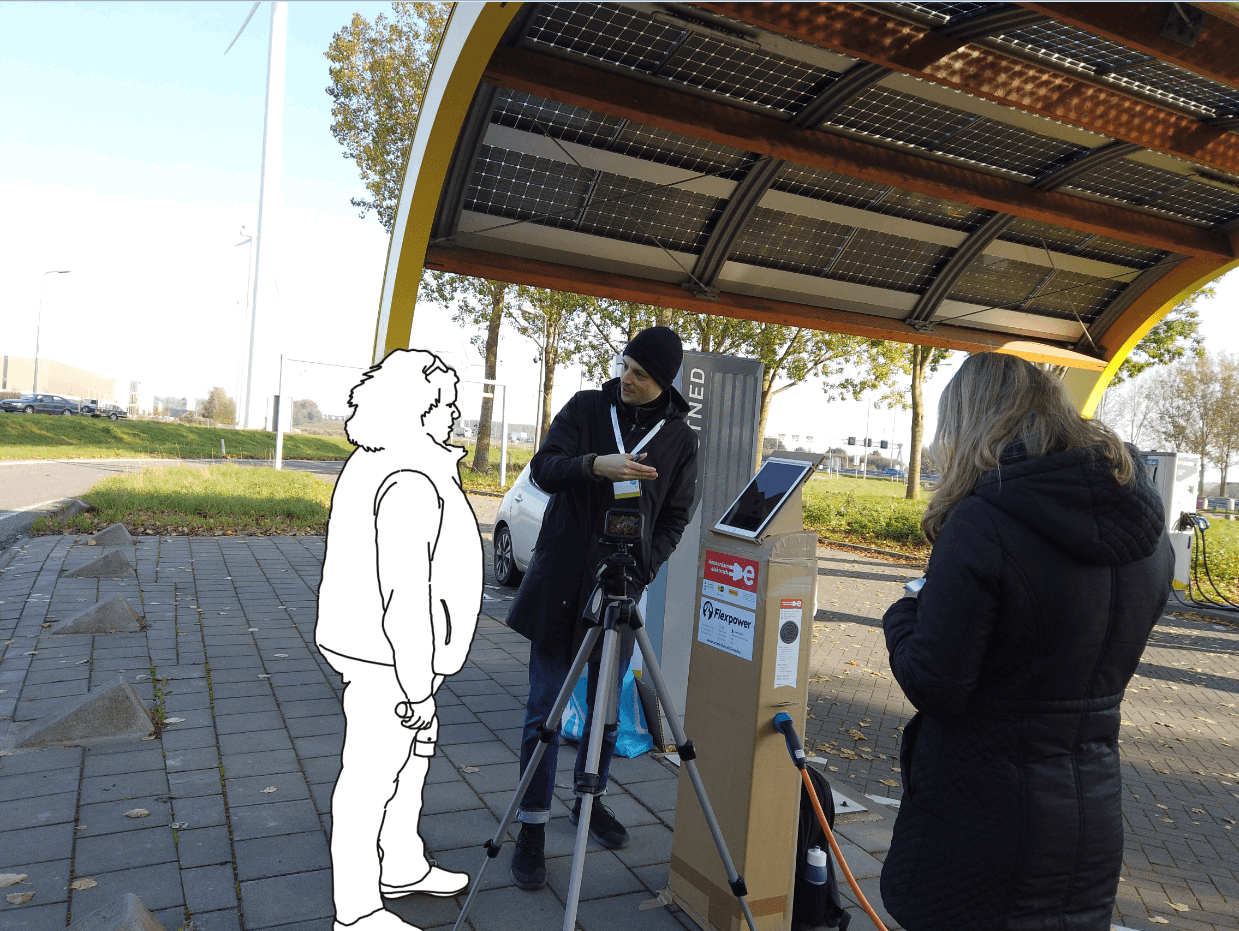

Working with the city of Amsterdam, Kars studied transparency in the context of smart electric vehicle (EV) charging stations. These stations vary their charging speed based on factors such as electrical grid capacity and the availability of renewable energy, and people may need help understanding why. Using a prototype of an alternative charging station with a display explaining the variations in charging speed, he set out to observe how people think about transparency. “One of the main things that came out of this study was that even though the design succeeded at providing information, people were not impressed because there was no opportunity to change anything about it if they wanted to,” says Kars. The insights from this work led to the core theme of his thesis: what he calls contestability.

Designing for contestability

In addition to EV charging stations, Kars also looked at other public AI systems, such as camera cars or scan cars, which are mainly used to monitor parking by scanning licence plates. This technology can also detect other types of objects in public spaces that are relevant to public administrators, such as garbage, broken street lights, or bulky waste containers. Because of his interest in conflict and how to deal with it constructively, Kars began to focus on contestability: the ability to oppose or object to something. He notes that prior research had already identified contestability as a desirable quality in AI systems, but little work had focused on translating these theories into knowledge that AI system designers can use.

Building on that, his work centred on enabling citizens to maintain some control over public AI systems, such as camera cars, that are used to make decisions about them. “If people disagree with a decision for whatever reason, whether it’s a mistake or maybe they see things differently, they should have the ability to oppose, appeal, or ask questions about such a decision,” he says. “And so, in that sense, they should have control.”

Going further, Kars says that citizens should also be involved in developing these systems, giving them a real say in how they should work. Ultimately, he says, public AI systems should be deliberately designed to be contestable, making them responsive to human intervention throughout the system lifecycle.

Right to autonomy

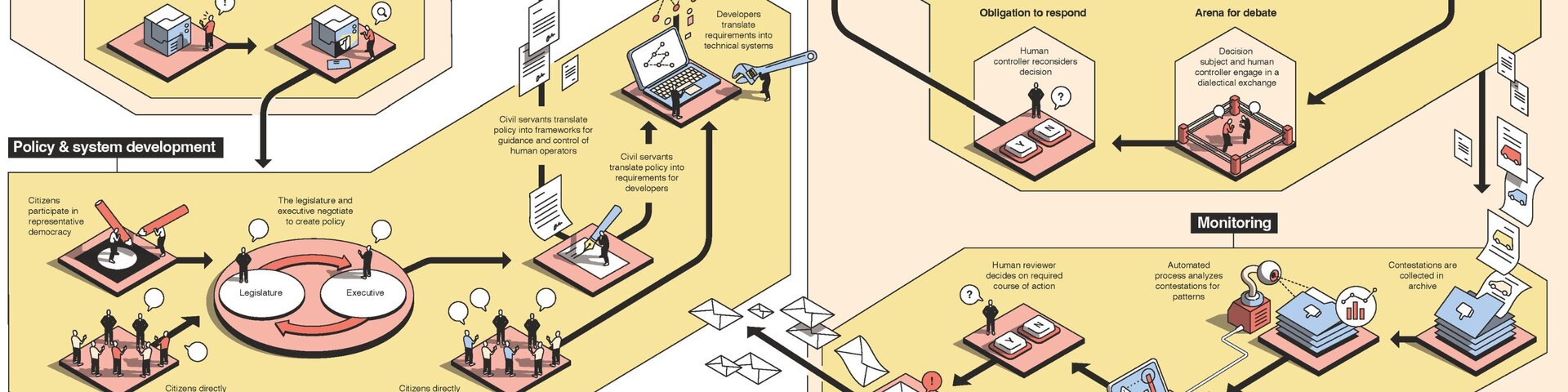

After a systematic literature review, Alfrink constructed a framework describing elements that can be added to systems, and actions that system developers can take, to make AI systems more contestable. He then connected these elements and actions to the groups of people commonly involved with AI systems. For example, one such element is a means for people affected by decisions to appeal them. The actions were then connected to the stages a system commonly passes through during development. For example, conflictual forms of citizen participation are connected to the design stage.

Alfrink then worked with an information designer to translate these into a visual explanation that designers can use as a tool. This practical guidance addresses the overarching question of Kars’ thesis: how to counter the potential harm public AI systems pose to human autonomy. He writes: ‘Transparency is essential for contestability, but alone, it is not enough. To increase the contestability of systems locally and globally, we need to implement system features and development practices that promote it, both before and after the fact’.

From a pragmatic perspective, Kars explains that contestability is a way for governments to continuously improve the quality of their systems. “A more normative argument is that by ensuring systems are contestable, you’re respecting people’s autonomy,” he says. Quoting a recent report from the Netherlands Scientific Council for Government Policy, Kars says: “People who experience control over life are usually happier and healthier, live longer, and are also more positive about society as a whole.” With the right to autonomy enshrined in various human rights bills, he says designing for contestability is a practical way to ensure that AI systems respect people’s autonomy.

Collaborative journey

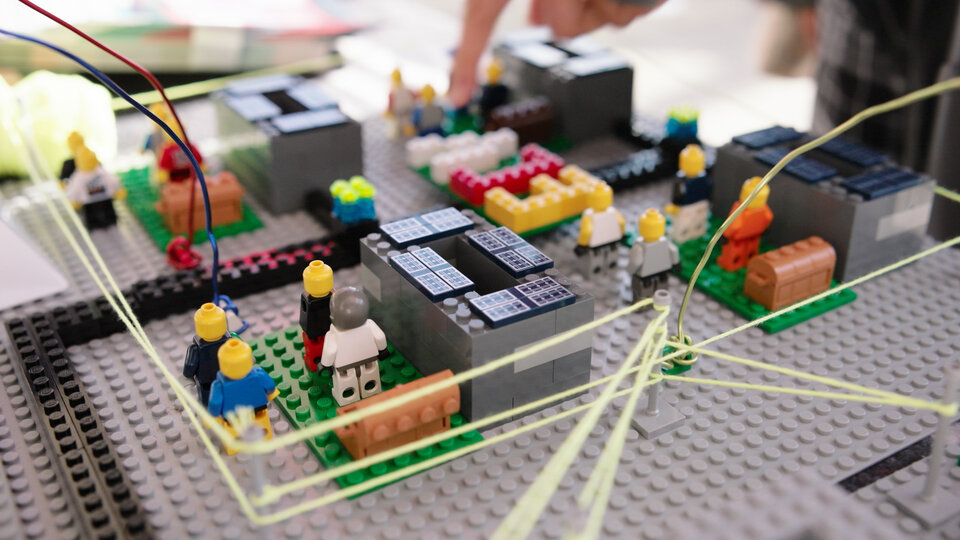

Having previously worked in the design field for over 15 years, Kars notes that it was quite a shift to go from a very collaborative industry practice to a PhD, which often feels like an individual enterprise. For that reason, he thinks it’s important to recognise the collective aspects of his PhD project. He worked closely with many people, including designers, civil servants, and creative professionals, and received support with networking and funding from the AMS Institute. “It was an interesting journey for me, and it was nice to gradually collaborate more and more as part of the research.” Kars is currently a postdoctoral researcher in the IDE faculty’s Knowledge and Intelligence Design section.
