Just like the roads that provide access to them and the dikes that protect them, cloud datacentres have become an essential part of our national, and worldwide, infrastructure. Thanks to capacity planning research by TU Delft master’s student Georgios Andreadis, these datacentres may continue to meet the ever-growing computational demands while reducing their operational costs and increasing their efficiency and environmental sustainability.

‘When I tell people about my research into computers, rather than with computers, they often meet me with interest, but also with disbelief,’ Georgios Andreadis says. ‘Most people take computers for granted as machines that just work, but there are plenty of things to explore that are non-trivial.’ It was a first-year course on Computer Organisation that sparked Andreadis’s interest in the architecture of computer systems and how to manage them efficiently. It eventually led him to pursue his master’s degree in the @Large Research group of Alexandru Iosup, professor at Vrije Universiteit Amsterdam and, previously, the lecturer for that course. His group specialises in computer systems for which the resources, users, or workloads are massive in nature.

Spreadsheets and rule-of-thumbing

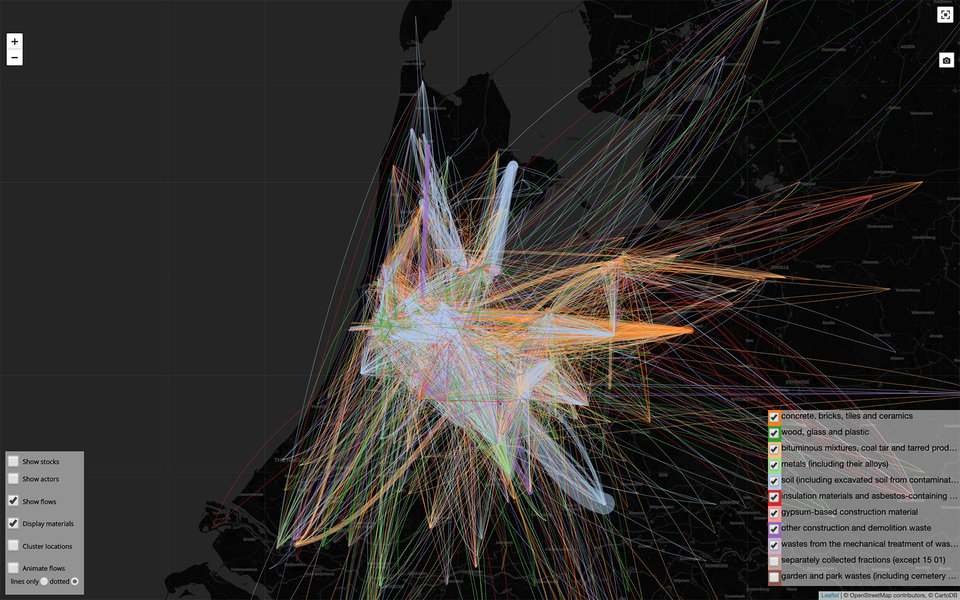

‘Cloud datacentres offer banks, healthcare, governments, emergency services, and many small businesses a safe and secure environment for storing their data and running their digital services,’ Iosup says. ‘Just like roads and dikes, they have become an essential part of our infrastructure. At any one time, we need to have sufficient capacity, lest society grind to a halt. Imagine what would happen if people could not transfer money for a couple of days.’ Until now, cloud datacentres have ensured the quality of their service by purchasing and installing plenty of excess computing power and data storage. Iosup: ‘Cloud datacentres already account for about two percent of the total world energy use, and it is growing. Simply buying more servers is not just unsustainable; climate-wise it is becoming irresponsible.’ Surprisingly, and unlike for roads and dikes, capacity planning for datacentres is still misunderstood and under-studied. Andreadis: ‘Whereas large players like Google and Amazon have certainly developed their own in-house approach, tens of thousands of small to medium centres basically rely on spreadsheets and rule-of-thumbing. In my thesis I tried to make an argument for basing capacity planning decisions on a much more rigorous, quantitative analysis.’

No firm ground

The end result of his thesis is a tool, named Capelin after a species of fish that plays an essential role in the food chain of whales and many kinds of fish and seabirds. Iosup: ‘We think that our tool is food for thought for datacentres. Also, we wanted a name that could stand the test of time and the word has some similarity, in sound, to capacity planning.’ Capelin assists capacity planners in deciding what hardware to purchase and install to support a certain mix of computational requests by their customers. As is often the case in science and engineering, the first step towards a solution was to clearly specify the problem. Andreadis combined a systematic literature review with in-person interviews at commercial and scientific datacentres. ‘There were only a few existing studies on this topic,’ he says. ‘I was even more surprised when the interviews showed that people don’t use what is described in the literature. It turned out that the published models were not realistic enough or only applicable to a very specific scenario.’ So, there was very little firm ground to stand on, for both the capacity planners and Andreadis. ‘That made it difficult for me, as any guidance had to come from my own ideas and conversations with my supervisors. On the other hand, being less constrained by what others had done gave me much more freedom to explore.’

Simulations are a planner’s best friend

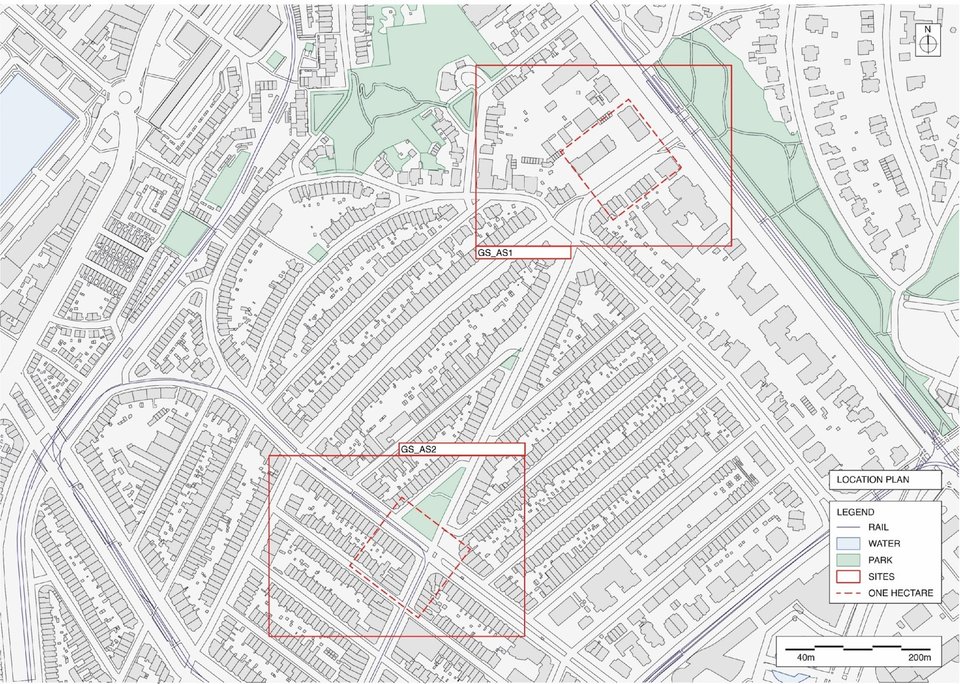

‘During my interviews, the idea of what-if scenarios really resonated with practitioners as it would create a better understanding of possible future outcomes, using factors such as the timing of deployments or changes in the computing power requested by their customers,’ Andreadis says. ‘This what-if methodology has become the central part of Capelin and helps them express and answer questions.’ It means that the capacity planner first designs a possible future layout of the datacentre – for example adding lots of cheap servers, or only a few very powerful ones. The planner then selects a portfolio of what-if scenarios, either suggested by Capelin or created by themselves. A scenario would for example be that the computing power requested by their customers increases by ten percent, as compared to the first layout, or that half of the datacentre goes down due to a large-scale failure. Finally, Capelin simulates the performance of the new datacentre layout for all these scenarios. The capacity planner can then make changes to the layout and repeat the simulation process. ‘You can’t test such scenarios in a real-world datacentre,’ Andreadis says. ‘Simulations are an ideal tool to figure out consequences before you make any decisions about capacity.’
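
The what-if loop described above can be sketched in a few lines of Python. This is only an illustration of the idea, not Capelin itself: the `Layout` and `Scenario` classes, the `unmet_fraction` metric, and all numbers are assumptions made up for this example, and a real simulator would model workloads, failures, and energy in far more detail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layout:
    """A candidate datacentre layout (hypothetical, heavily simplified)."""
    name: str
    servers: int
    cores_per_server: int

    @property
    def capacity(self) -> int:
        return self.servers * self.cores_per_server


@dataclass(frozen=True)
class Scenario:
    """A what-if scenario, expressed relative to the baseline situation."""
    name: str
    demand_factor: float = 1.0       # multiplier on the baseline demand
    available_fraction: float = 1.0  # share of the hardware still running


def unmet_fraction(layout: Layout, scenario: Scenario, baseline_demand: float) -> float:
    """Share of the requested cores that the layout cannot serve (0.0 is ideal)."""
    demand = baseline_demand * scenario.demand_factor
    capacity = layout.capacity * scenario.available_fraction
    if demand <= 0:
        return 0.0
    return max(0.0, demand - capacity) / demand


def run_portfolio(layout, scenarios, baseline_demand):
    """Evaluate one layout against every scenario in the portfolio."""
    return {s.name: unmet_fraction(layout, s, baseline_demand) for s in scenarios}


# Illustrative portfolio, mirroring the examples in the text.
portfolio = [
    Scenario("baseline"),
    Scenario("demand +10%", demand_factor=1.1),
    Scenario("half the datacentre down", available_fraction=0.5),
]

# Two made-up candidate layouts: many cheap servers versus a few powerful ones.
layouts = [
    Layout("many cheap servers", servers=200, cores_per_server=8),
    Layout("few powerful servers", servers=20, cores_per_server=64),
]

baseline_demand = 1200  # requested cores, an invented figure

results = {l.name: run_portfolio(l, portfolio, baseline_demand) for l in layouts}

for layout_name, per_scenario in results.items():
    for scenario_name, unmet in per_scenario.items():
        print(f"{layout_name:22s} | {scenario_name:26s} | unmet demand: {unmet:.1%}")
```

A planner would inspect the table, adjust a layout, and run the portfolio again, which is the repeat step the article describes.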

Failure and success

A major difficulty was that, for Capelin to report on the performance of the intended layout, it needed to have a good definition of success and failure. ‘These are severely underspecified within the field of capacity planning for cloud datacentres,’ Andreadis says. ‘I had anticipated this, but it really crystallised during the interviews.’ It is the many factors that play a role in capacity planning – such as timing of an upgrade and available personnel – that make it difficult to attribute a failure to the planned hardware layout. Some failures go unnoticed, such as servers being used slightly less than normal. Others are very visible when, for example, the online banking system stops allowing money transfers for a few hours. On the other hand, it is also very difficult to do demonstrably well as a capacity planner. A datacentre may be more energy efficient but at a higher investment cost, or it may be more performant but also more prone to failures. Andreadis: ‘Until our simulator came along it was hard to express the exact merits and value of one single planning decision. Now we can evaluate the trade-off between energy use, investment and performance.’
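
One common way to reason about such a three-way trade-off is to keep only the options that no other option beats on every criterion (a Pareto front). The sketch below illustrates that general technique; it is not taken from Capelin, and the `Outcome` fields, layout names, and numbers are invented for the example.

```python
from typing import NamedTuple


class Outcome(NamedTuple):
    """Simulated result for one layout; lower is better for every metric."""
    layout: str
    energy_mwh: float       # hypothetical yearly energy use
    investment_keur: float  # hypothetical purchase cost
    slowdown: float         # hypothetical performance penalty (1.0 = no slowdown)


def dominates(a: Outcome, b: Outcome) -> bool:
    """True if a is at least as good as b everywhere and strictly better somewhere."""
    a_vals, b_vals = a[1:], b[1:]
    return (all(x <= y for x, y in zip(a_vals, b_vals))
            and any(x < y for x, y in zip(a_vals, b_vals)))


def pareto_front(outcomes):
    """Keep the outcomes that are not dominated by any other outcome."""
    return [o for o in outcomes
            if not any(dominates(other, o) for other in outcomes)]


# Made-up numbers, purely for illustration.
outcomes = [
    Outcome("A", energy_mwh=100, investment_keur=500, slowdown=1.20),
    Outcome("B", energy_mwh=80, investment_keur=650, slowdown=1.10),
    Outcome("C", energy_mwh=120, investment_keur=550, slowdown=1.30),
    Outcome("D", energy_mwh=90, investment_keur=700, slowdown=1.05),
]

front = pareto_front(outcomes)
print([o.layout for o in front])
```

In this toy data, layout C is worse than A on all three criteria and drops out; choosing among the remaining layouts still requires the planners' judgement about which criterion matters most.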

Better than reasonable

After building and carefully validating a prototype of Capelin, Andreadis ran a number of experiments based on anonymised data from real-world cloud datacentres. These showed that it is certainly possible to improve on capacity planning choices that seem reasonable and common in practice. Capelin-based optimisation could, for example, reduce energy consumption by a factor of two while improving performance. An additional merit of Capelin is that it also helps to streamline the process of capacity planning itself. ‘Capacity planning is not just about the hardware,’ Andreadis says. ‘It is a multi-dimensional problem also involving personnel aspects, timing of the extension, and other business factors that are hard to include in a model.’ Typically, a group of key personnel, having various roles within the datacentre company, meets over a period of months before a decision is made. ‘Rather than having to wait until the next meeting for some Excel-based estimates, our tool provides quantitative answers within minutes, even in realistic, complex situations. It can be used live in meetings, reducing the time and personnel needed to come to a definitive decision, while also increasing the confidence in that decision.’ It is for this reason that many organisations, from industry to scientific institutions, have already shown interest in integrating Capelin into their capacity planning process once it is released.

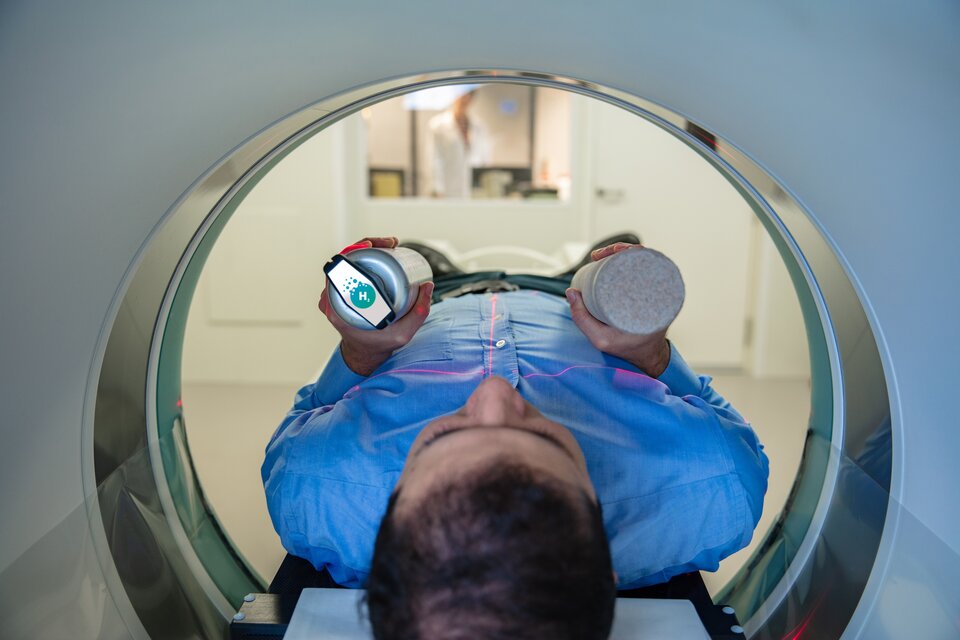

Besides finishing his master’s thesis, Andreadis spent part of the summer as a volunteer for the Red Cross – testing people for COVID-19 as they were about to enter the hospital – and providing first aid as a lifeguard at the beaches of The Hague. He was also a volunteer programmer at We\Visit, the online platform developed at TU Delft that allows intensive care patients to connect with their relatives through video calls. He wasn’t yet on the lookout for a job when his eye fell on a PhD position at Leiden University Medical Center in collaboration with the Centrum Wiskunde & Informatica in Amsterdam. ‘Starting in November, I will develop so-called evolutionary computer algorithms to model tumour growth based on multiple time-separated MRI scans. It is the perfect combination of a scientific challenge and bringing my passion for healthcare into my daily job.’ His colleagues and supervisors in the @Large Research group, who provided essential support to Andreadis during his master’s research, will miss him. ‘He has a rare talent for both science and leadership. I expect great things from him,’ his supervisor Iosup says. ‘It is a loss to have someone so good leaving, but once the corona epidemic is over, he will still be joining the team events and parties.’

Text: Merel Engelsman | Photography: Georgios Andreadis