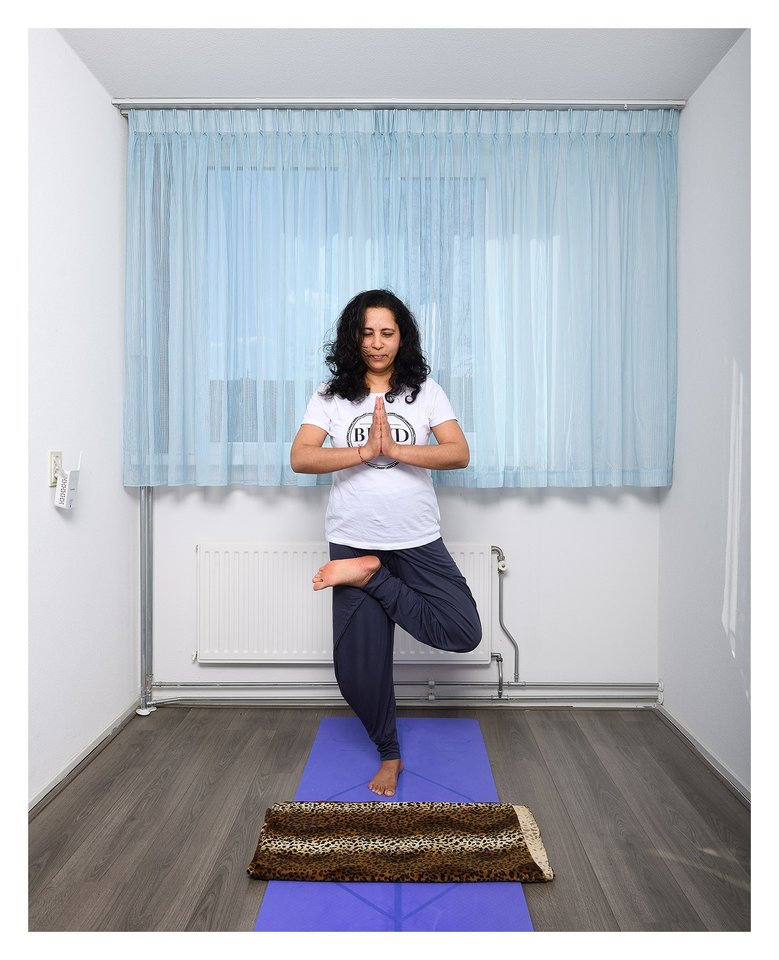

Karthik Yadati came to the Netherlands on New Year's Eve 2013. In his first few days he was surprised by the fireworks and the deserted streets (why does everyone stay inside?), but he soon found his way into the Multimedia Computing group of EEMCS. Under the supervision of Alan Hanjalic and Cynthia Liem, the PhD student set out to discover what exactly music does to the listener. ‘Little research had been done into how music affects listeners while they work. I want to change that...’

You recently and successfully defended your thesis, ‘Music in Use: Novel perspectives on content-based music retrieval’. Tell me, what exactly did your research involve?

My research involved building systems that make music more useful to people. When you’re at work, you listen to music, don’t you? But sometimes a song distracts you from your work. You grab your phone, open your playlist and skip to the next song. You’re fed up, because that one particular song has pulled you out of your flow. Very annoying, of course! What I tried to do in the last part of my PhD was build an algorithm that automatically detects where in a song you are likely to get distracted. So, give me a song, and I can point out the moments in it where your concentration is at risk.

How did you manage to collect the right data?

To find out what distracts people while they listen to music, I collected data from workers on Amazon Mechanical Turk, a crowdsourcing marketplace where individuals and businesses can hire people to perform tasks that computers are currently unable to do. To avoid focusing on purely subjective responses, I looked for aspects that hold across listeners. With that in mind, I tried to answer questions such as ‘Which music supports you while you work?’ and ‘Which music distracts you?’, and that’s how I built the algorithm.

Could you give some examples of what people experience as distracting?

A sudden acceleration in the music is often experienced as disturbing. Or vocals that suddenly appear, out of nowhere, after the song has been instrumental for some time. Then there is the drop: the moment when all the built-up musical tension is released and the beat takes over. These are just a few of the examples I have included in my models. If you look at the playlists available online today, you can see that most of them have been created by hand. The algorithm I'm developing should add something new to the streaming landscape: generating playlists automatically by analysing the songs themselves.

You seem to have a musical background of your own, right?

Yes, that's right. I have been interested in music since I was a child. When I arrived in the Netherlands, I contacted the Indian singer Samhita Mundkur. She founded Zangam, the first choir in the Netherlands to perform a repertoire of Indian songs. After joining the choir, I suddenly found myself taking part in a performance of the first act of Philip Glass's Satyagraha, and we also performed at the Royal Choir Festival in the Concertgebouw in Amsterdam. Although I'm moving to Toulouse this summer to work for Airbus in a completely different branch of signal processing and machine learning, I hope that one day my algorithm will be used for therapeutic purposes. Could certain music be recommended in medical situations? Helping people get healthy again with music: I'd really love that.