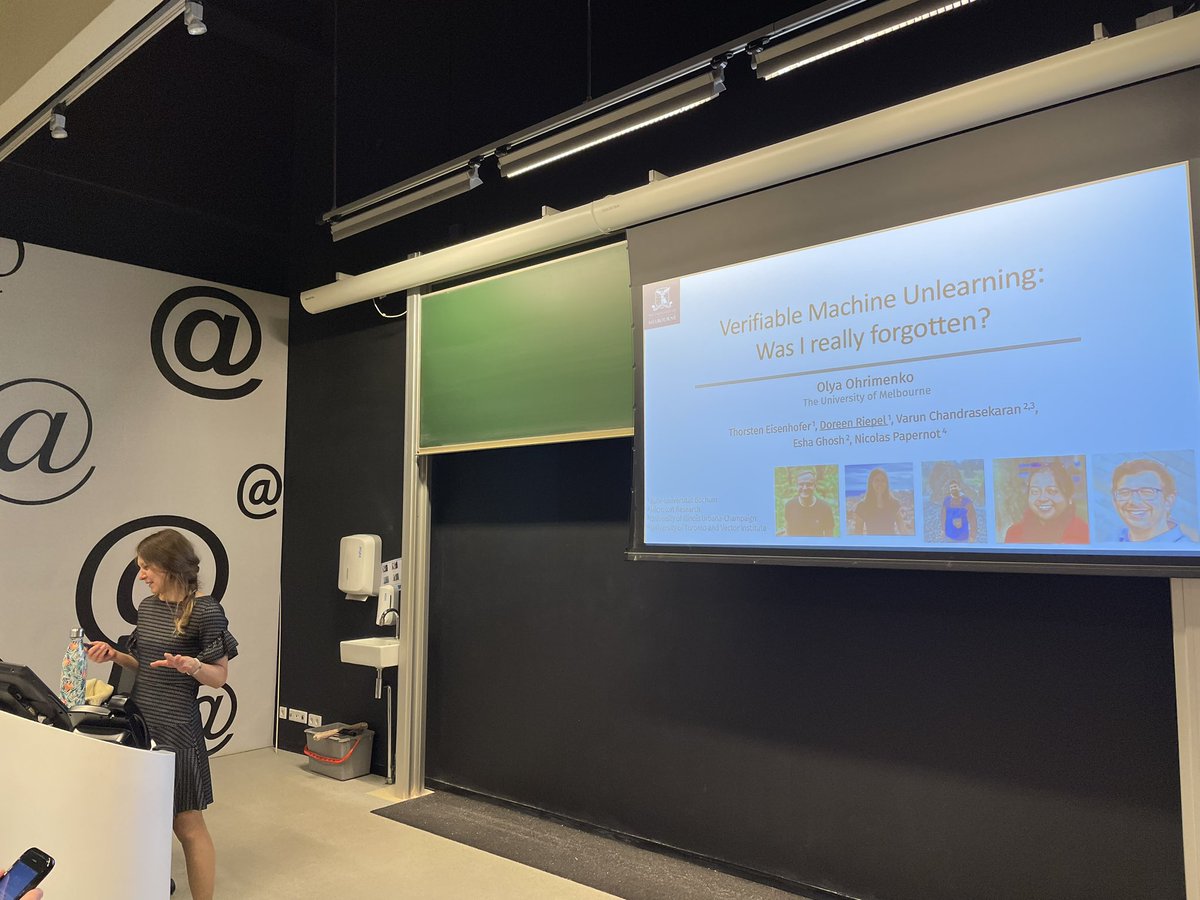

Verifiable Machine Unlearning: Was I really forgotten?

The right to be forgotten entitles individuals to request that a service provider delete the data it has collected about them. Since data is often used to train machine learning models (e.g., to improve user experience), Machine Unlearning has been proposed as a way to remove data points from a model. But how does the user know that the service provider actually executed Machine Unlearning? The problem is not trivial, as model parameters may be the same before and after data deletion. In this talk, I propose to view Machine Unlearning as a security problem. To this end, I will give an overview of the first cryptographic definition of verifiable unlearning, which formally captures the guarantees of a machine unlearning system. I will then show one possible instantiation of this general definition based on cryptographic assumptions and protocols, using SNARKs and hash chains. Finally, I will present evaluation results of our approach on several machine learning models, including linear regression, logistic regression, and neural networks. This talk is based on joint work with Thorsten Eisenhofer, Doreen Riepel, Varun Chandrasekaran, Esha Ghosh, and Nicolas Papernot.

Olya Ohrimenko is an Associate Professor at The University of Melbourne, which she joined in 2020. Prior to that, she was a Principal Researcher at Microsoft Research in Cambridge, UK, where she started as a Postdoctoral Researcher in 2014. Her research interests include privacy and integrity of machine learning algorithms, data analysis tools, and cloud computing, including topics such as differential privacy, dataset confidentiality, verifiable and data-oblivious computation, trusted execution environments, and side-channel attacks and mitigations. Recently, Olya has worked with the Australian Bureau of Statistics and National Australia Bank. She has received solo and joint research grants from Facebook and Oracle and is currently a PI on an AUSMURI grant.