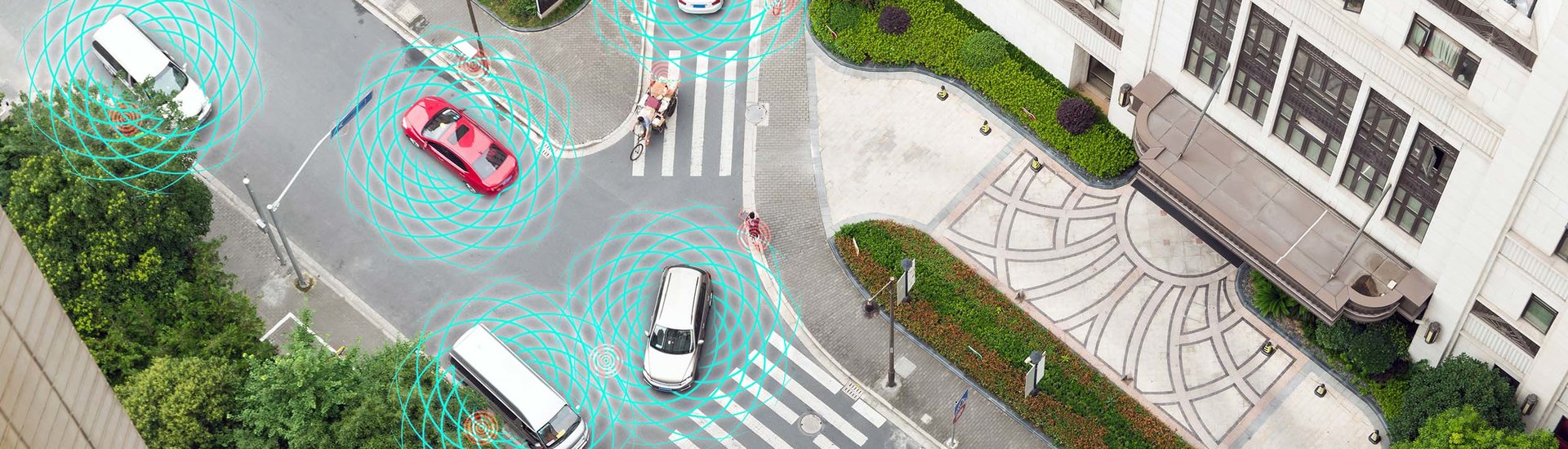

Who is responsible when self-driving cars are involved in accidents? Recently a self-driving Volvo in Arizona collided with a pedestrian, who did not survive the accident. And in 2016 there was a fatal incident involving a self-driving Tesla. Filippo Santoni de Sio of TU Delft analyses the ethical issues around self-driving cars.

Many benefits are expected of automated driving systems (ADS), such as reduced congestion and fewer crashes. However, the first accidents have also occurred. Santoni de Sio: “How can the risks of ADS be minimised and human safety be safeguarded? A responsible transition toward automated driving is desirable. That is what we are researching in the project ‘Meaningful human control over automated driving systems’.”

Responsibility gap

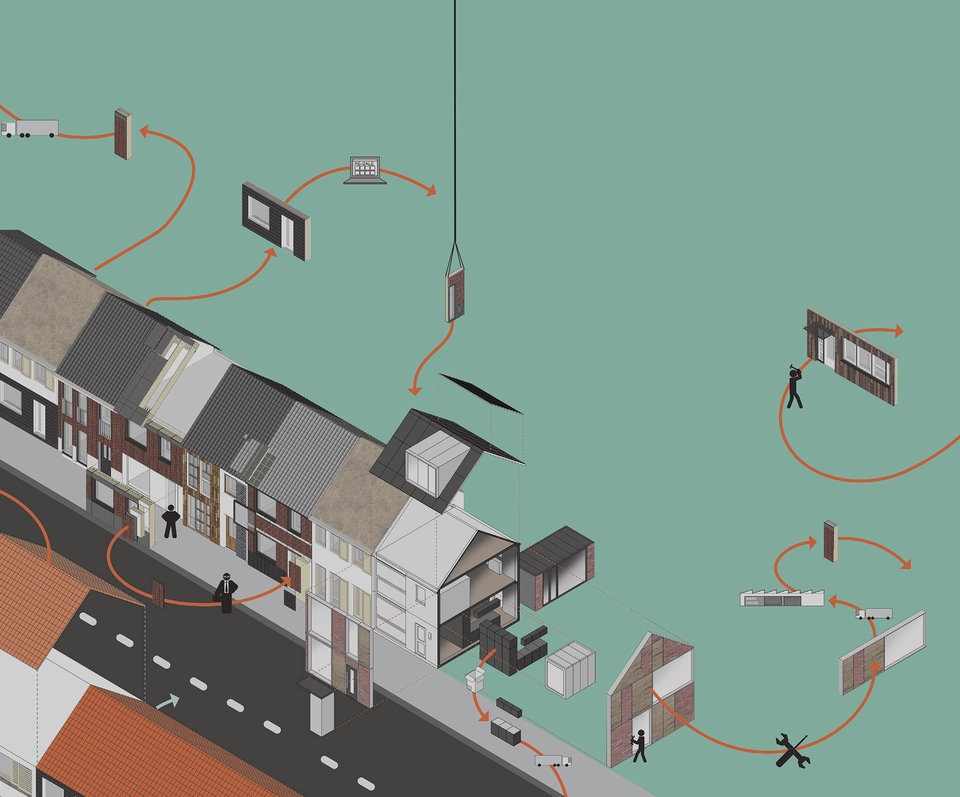

“This angle has not been researched yet. ‘Meaningful human control’ has been identified as key for the responsible design of autonomous systems operating in circumstances where human life is at stake. This means that humans, and not computers and their algorithms, should be morally responsible for potentially dangerous driving. By preserving meaningful human control, human safety can be better protected and ‘responsibility gaps’ avoided.”

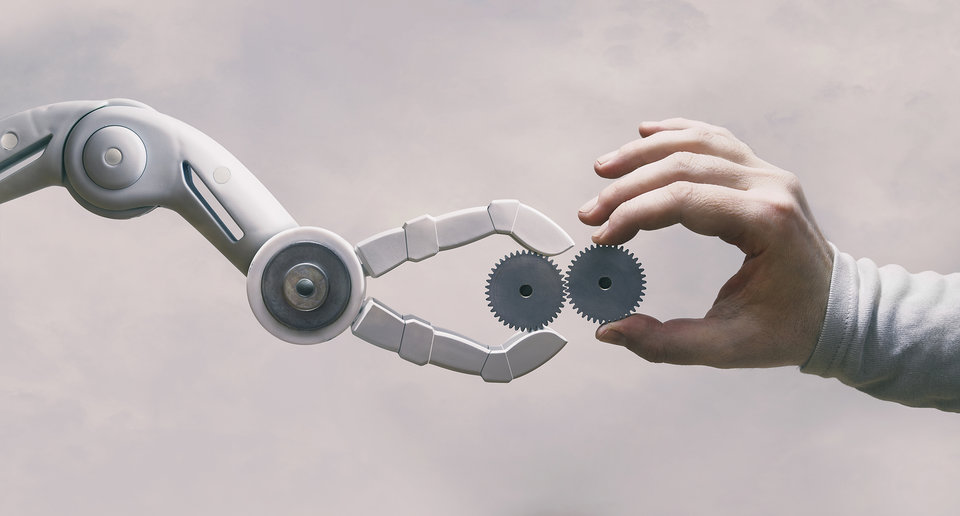

Optimal design

In an optimal design, humans and technology work together with the intention to minimise risk and guarantee an appropriate action when needed, for example when an automated system fails to intervene in a dangerous situation. “It is crucial, however, that a person is also capable and willing to comply with this distribution of tasks. Besides driving, drivers will also need new skills, such as monitoring and supervising the vehicle in an automated system. This must all be taken into account when designing the system. This is why the project has a strong component of traffic engineering and behavioural science too,” says Santoni de Sio.

Partial and supervised autonomy

The project will focus on meaningful human control in two types of driving automation modes: partial and supervised autonomy. These are the most likely types to emerge in the coming ten to fifteen years. Partial autonomy relates to vehicles that can be driven either manually or in automated mode, and supervised autonomy relates to fully computer-controlled ‘pods’ continuously monitored by a (possibly remote) human supervisor.

Ultimate goal

However, there is no satisfactory theory of what meaningful human control precisely means in relation to ADS. The goal of this project is to come up with an academically solid definition that is also workable. Santoni de Sio: “Our team of researchers from TPM and Civil Engineering, consisting of philosophers, behavioural scientists and traffic engineers, will first develop a theory and then translate ‘meaningful human control’ into system design guidelines at a technical, regulatory and legal level, together with industry and policy makers. Human control should be incorporated not only at the level of the driving system, but also in the systems beyond it.”

Results are expected in the year 2020. Santoni de Sio would like to set the bar high and even look beyond automated driving systems: “Preferably the definition can also be applied to other autonomous systems, for instance in healthcare or financial systems. That would be the ultimate goal.”

Filippo Santoni de Sio

More information

Filippo Santoni de Sio is assistant professor in Ethics of Technology and works at the section Ethics/Philosophy of Technology of the department of Values, Technology and Innovation (VTI). He recently started up a new MSc course in ‘ethics of transportation’.