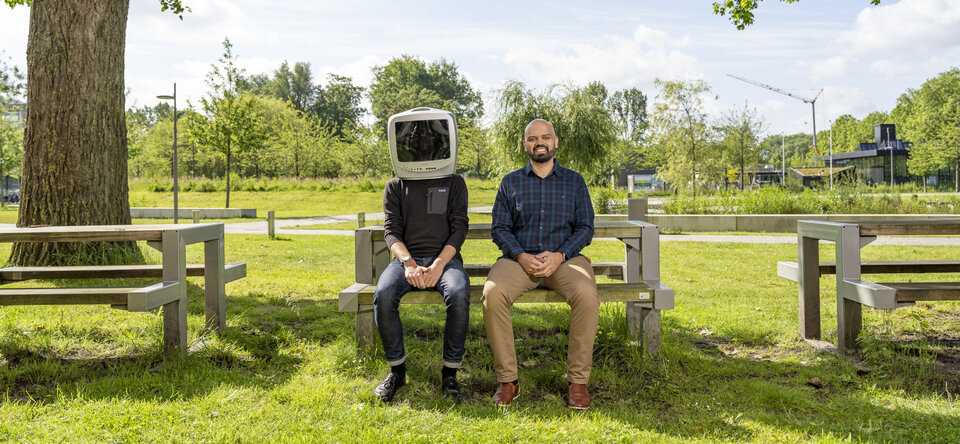

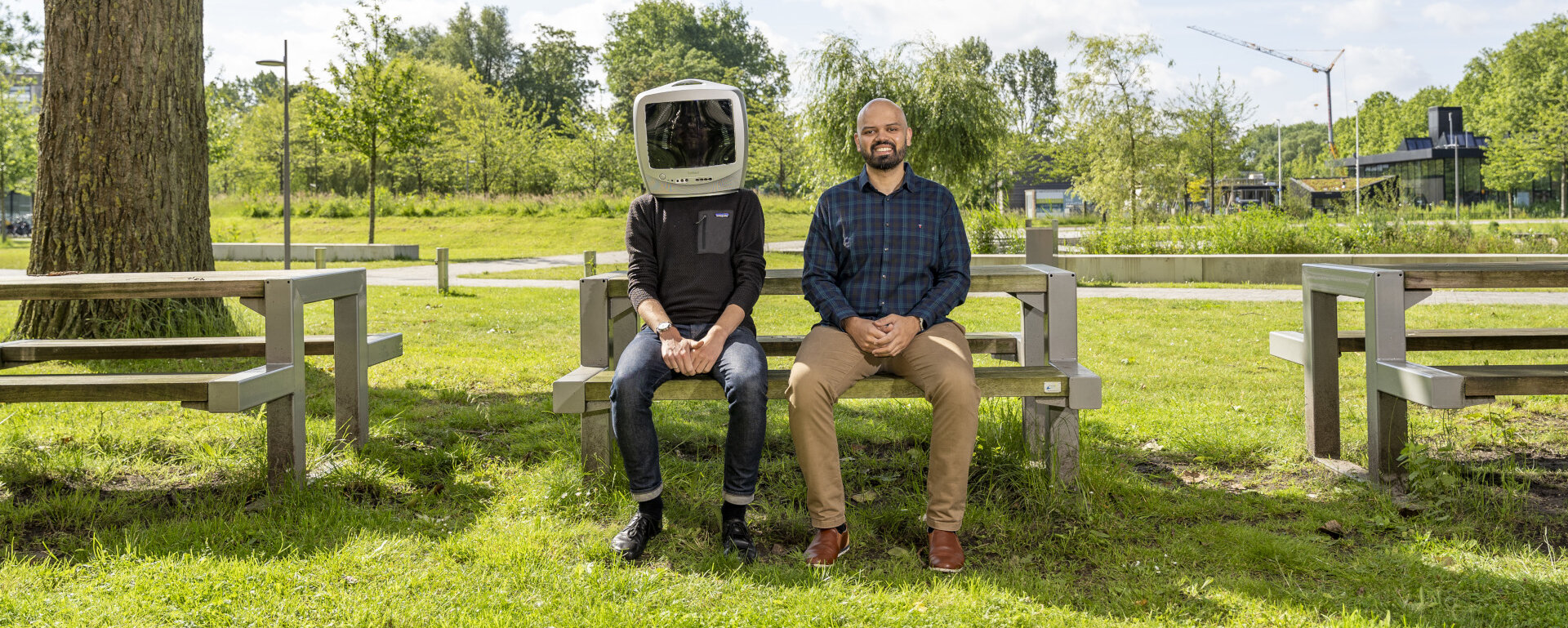

Social skills for digital humans

If anything, the Covid pandemic taught us that real-life interactions couldn’t be replaced by digital ones. This is just one of the reasons why Chirag Raman, assistant professor in the Pattern Recognition and Bioinformatics group, wants digital agents or avatars to interact with humans in a more seamless and lifelike manner. Using artificial intelligence and knowledge of human behaviour, he is teaching machines to understand and deploy human-like social intelligence – verbally, vocally and visually. On the horizon are technologies for a wide range of applications, from distance learning and e-healthcare to immersive games.

Why is a computer scientist interested in non-verbal communication?

This conversation via Zoom is, in some sense, an embodied interaction, because you're a two-dimensional image on my screen. While we’re talking, I'm also reading the non-verbal cues you’re giving. So, if you're nodding, I assume that I'm making some sense. Or if you frown, I assume that I've said something weird. Now imagine we're meeting not via Zoom but in a virtual or augmented reality situation. Can we translate your social cues to your digital avatar? And can we teach artificial intelligence to do the same?

Actually, realism is not always the holy grail. There are therapy settings where patients prefer an unrealistic digital avatar over a more realistic therapist. But having an understanding of social cues when generating behaviours for these avatars is always beneficial. For example, if you’re a patient in therapy, you may want to feel a sense of rapport, to feel that your therapist understands you. That’s when non-verbal expressions become relevant. There is literature showing that if you mimic the person you’re talking to, they will feel like they gel with you better. In such cases, getting the digital agent to smile and nod at the right moment becomes really important.

Doesn’t that become a bit ... eerie?

If the visual realism and behavioural realism don't match, things get very creepy very quickly. Ten years ago, video games were much less realistic. That wasn’t a problem because the fidelity of how the avatars behaved matched the fidelity of how they looked. But now that we have these great graphics, a beautiful avatar that just keeps staring at you without blinking or without the subtle movements that humans use … that's very creepy, right?

Teaching an avatar to blink can’t be that difficult.

Blinking is just one of many social cues. And interpreting the nuances of these cues is very subjective. For example, there is an open debate about what really constitutes smiling. Which muscles are deforming and how? If it's not accompanied by these crow's feet around your eyes, a smile could also be a grimace. And things get much more complex when you try to assign a social meaning to the expression, because the same expression can be perceived differently by different people in different parts of the world. This also makes it very difficult to objectively evaluate the avatar’s performance.

To make models more human-like, you first need to understand human behaviour.

I'm very interested in identifying causal relationships between behavioural patterns. When we’re talking to each other, there is a feedback loop of perception and response. A digital agent will respond more naturalistically if it understands these causal patterns between non-verbal behaviours. I’m currently working on linking causal reasoning with behaviour synthesis. For example, can we get artificial intelligence to predict someone’s behaviour by observing a slice of their interactions with others? Even forecasting low-level behaviours is technically a very hard problem for AI, because there are a lot of possible futures for the same sort of input. Research into causal patterns is still at the technical level, because it involves expressing existing social theory mathematically to make machine models more socially aware. But if we succeed, we can give new insights back to the social sciences.

What role do the social scientists play in your research?

I try to bring in people to complement the skills I have as a computer scientist. Ultimately, it matters less what our disciplines are – what matters is asking the right questions. There seems to be this general perception that at a technical university, there isn't a lot of collaboration with people from different backgrounds. That’s not true! I want to be a bridge between computer science and the social sciences. A bridge needs to be anchored on both sides, but you also need people to view you as a bridge. They need to try and cross the divide.

You also work with partners from industry.

Yes, I've had the good fortune of working with people at companies like Google and Microsoft who are, in a way, academics at heart. The idea is to pursue research that doesn’t need to benefit the company. My Google grant, for example, is an unconditional donation, no matter the outcomes. The real question with the dichotomy of industry versus academia is: can we make our science more accessible and open?

These are exciting times for AI.

Yes, there's been an explosion in terms of both the capabilities of machine learning and the attention it has been receiving from the general populace and the media. People can now play with machine learning techniques without having to implement them from scratch. That also means that there’s less understanding of what these tools do and do not do under the hood. There’s a risk of unrealistic expectations when people no longer understand the limitations of the technology. Research is exploding, too: last year, no fewer than ten thousand papers were submitted to the Conference on Neural Information Processing Systems. Luckily, ours was among the accepted ones. To be honest … seeing how the AI field expanded over the last six months, I'd be very hesitant to predict what it will look like in ten years’ time!

![Chirag Raman](https://filelist.tudelft.nl/_processed_/1/1/csm_PORTRET-Chirag_151bfdb6ce.jpg)