While algorithms are good at keeping planes airborne and will soon keep self-driving cars on the road, algorithmic decision-making by the government puts citizens at ever-increasing risk. That is, unless we apply lessons from systems and control engineering to the design processes and governance of public algorithmic systems, as Roel Dobbe does.

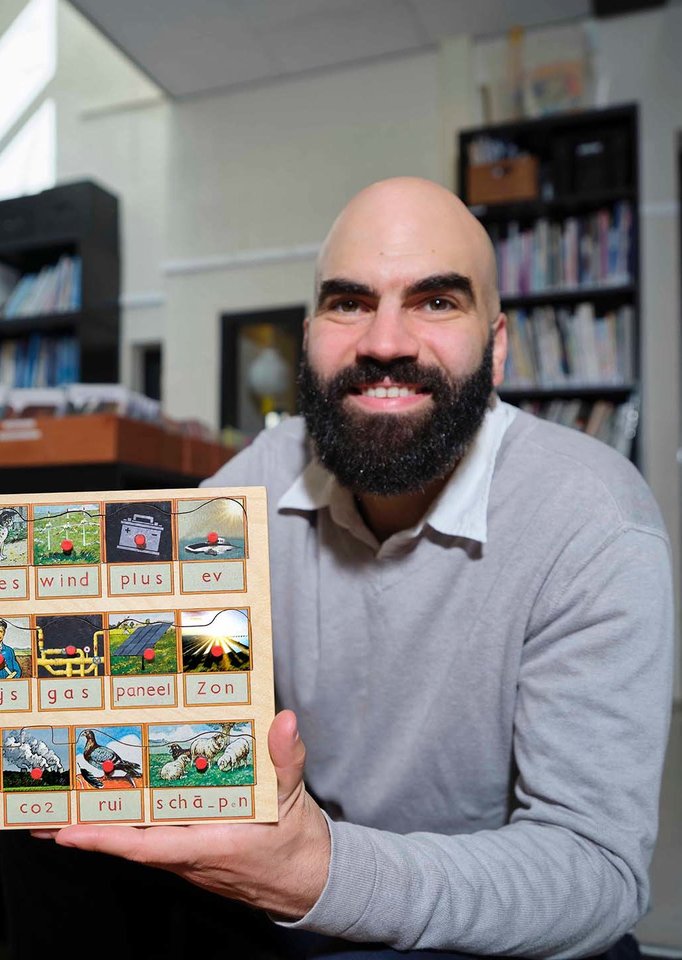

The Dutch childcare benefits scandal and the role of the ‘System Risk Indication’ in combating welfare fraud are just two recent examples of a storm Roel Dobbe saw coming about a decade ago. He traded his consultancy job for a PhD at Berkeley, investigating the social implications of data-driven technology. For him, the move was about letting society benefit more directly from the lessons he had learned in systems and control engineering about designing and optimising complex processes.

‘We must give very careful consideration to the automation of our critical infrastructure – a bridge, our power grid, but also social security. Automation not only increases the probability of mistakes, but those mistakes can also quickly harm a lot of people, especially in the social domain. My opinion on this is quite controversial: we should not unleash these systems on our society if we cannot prove them to be consistently safe, just and aligned with other crucial public values.’

Thin ice

The meteoric rise in the use of artificial intelligence (AI), alongside the “traditional” rule-based algorithms, is yet another complicating factor. Dobbe: ‘With millions or even billions of parameters, nobody really understands how AI works and what can go wrong. And as a government official, you have very limited control over how safety and justice are safeguarded in the design. Put simply, as a public organisation you tread on very thin ice when using AI. Unfortunately, we see that confidence in inherently problematic applications remains high.’

According to Dobbe, systems and control engineering offers much-needed insights for the design, management, and supervision of public algorithmic systems. ‘The concept of system safety was originally developed to keep planes airborne and fly them safely from A to B. But it is just as applicable to energy systems, healthcare, and social security. In current policy, and in its automation, it is common to focus on accountability and transparency. Though certainly useful, that focus guarantees neither the proper functioning of these systems nor safe and just outcomes.’

A socio-technical perspective

Besides the algorithm itself, system safety is very much about the organisation (with its own complexity) in which the algorithm is deployed and which makes decisions based on its outputs. Dobbe: ‘Who will use the algorithm, where and how? Has reasonable supervision been implemented? And should things go awry, what options does a citizen have to object, to minimise the impact of an error, and to seek compensation for damages? In answering these questions, we apply not only a technical perspective to system safety, but also social, governance and humanities perspectives: in short, a socio-technical approach.’

There is no system safety without an active safety culture. ‘It has long been known that it is vitally important to allow potential issues to be discussed without the risk of repercussions. This prevents small issues from growing into big problems and accidents. The current problems at the Dutch Tax and Customs Administration are a case in point. The prevailing culture of fear and blame makes it impossible to prioritise the interests of those harmed by the childcare benefits scandal, thereby increasing their distress. It’s a classic tale of how things go wrong in the history of automation in complex systems.’

Tunnel vision

Unlike the engineering sciences, the social domain doesn’t have a history of system safety. However, historical lessons proved valuable when Dobbe and one of his master’s students scrutinised the Wajong benefit (a disability provision for young people entering the workforce). Eligibility for this benefit is determined by a combination of fully automated and (algorithm-supported) human decisions. Dobbe: ‘It became apparent that benefit holders are especially at risk of harm when one organisation, such as the Tax Administration, changes its decision-support algorithm without verifying the implications for benefits or taxes administered by other organisations, such as the Netherlands Employees Insurance Agency (UWV) or the Social Insurance Bank (SVB). It is the citizen who ends up falling victim to such ripple effects.’

The many rules and regulations currently being implemented to ensure the responsible design of algorithms do not yet consider these ripple effects and the consequences they have for citizens. ‘I consider it my calling to bring this to the attention of policymakers, to show them that they are in need of a different perspective, and to develop the appropriate tools and solutions.’

Pioneering work

A daunting task, to say the least. After ten years it still feels like pioneering work to Dobbe. ‘It’s a good thing that I left the US and returned to Delft. And it was only logical for me to join the faculty of Technology, Policy and Management (TPM). My systems and control expertise in combination with the socio-technical approach of TPM truly offers a suitable perspective for understanding and addressing these complex issues.’

Part of his work consists of empirical research. ‘Our PhD and master’s students make first-hand observations from within an organisation. What policies and regulations are in place, and what systems do they use? For AI systems in particular, we look at the trade-offs involved. Are there tensions between public values, and how might these affect citizens and professionals?’

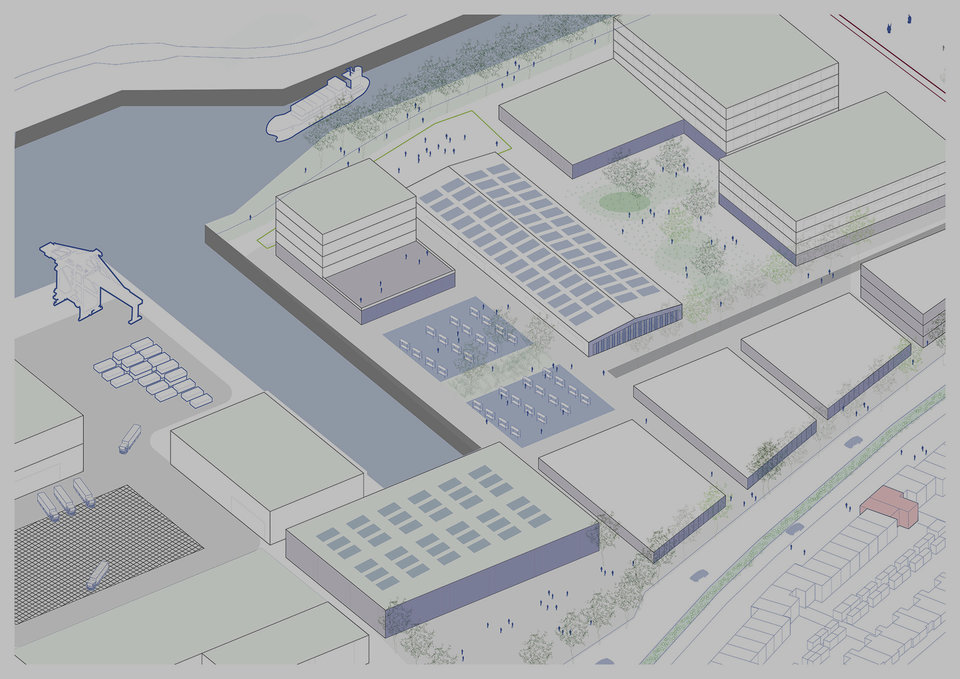

Part of his theoretical research involves machine learning (a form of AI). ‘We want machine learning practice to become more “socio-technical”. How can we, for example, help this highly technical world to also consider criteria from policies and regulations, including the social issues you may run into when releasing a machine-learning model into the real world?’ An important open question is how to define and preserve safety and meaningful human control, in particular for vulnerable people. ‘To do this, we must bring together multiple disciplines and, more importantly, the various stakeholders. This year, we will therefore establish a Transdisciplinary Centre of Expertise for Just Public Algorithmic Systems – a partnership between governments, public parties, companies, and knowledge institutions that will be housed in The Hague.’

An exciting and worthy cause

Thanks to his pioneering work, Dobbe is frequently consulted by various parties in the public domain. In public administration, he was involved in the creation of both the algorithm watchdog and the algorithm registry. He and his group also introduced systems thinking to the consortium for “Public Control of Algorithms” – a collaboration, supported by the Ministry of the Interior and Kingdom Relations, of many public organisations from all over the country that build policy solutions. His lessons on the introduction of artificial intelligence reach well beyond public services, as he addresses similar issues in the health and energy domains.

For now, the role of a scientist tackling these urgent and dynamic social themes fits him like a glove. ‘It is an exciting and worthy cause, to have these pressing issues serve as a basis for acquiring new scientific knowledge while also developing practical frameworks and guidelines,’ he says. ‘And occasionally to hit the brakes on an AI system that simply isn’t a solution to a particular problem, or whose implementation is inherently unsafe or unjust.’