Probabilistic & Statistical Fuzzy Systems

This is my initial web page on Probabilistic & Statistical Fuzzy Systems.

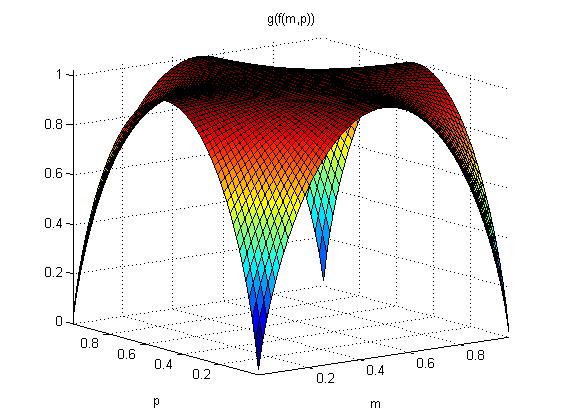

As a starting point, the figure above visualizes the entropy H = H(m, p) of a binary probabilistic fuzzy information source that generates two complementary fuzzy events, defined by the membership functions (m, 1 - m) and (1 - m, m) respectively, according to the probability distribution (p, 1 - p).

This is a generalization of the entropy of a binary source generating two crisp events with probability distribution (p, 1 - p), a well-known example taught in courses on information theory, the theory founded by Claude E. Shannon in 1948.

This work extends the work presented at the SMPS conference in Oviedo, Spain (2004). The figure shown here was presented at a session on fuzzy statistical analysis at ERCIM09, held October 29-31, 2009 in Cyprus.

From the figure we can observe (although some people thought otherwise) that the surface of the function is concave almost everywhere, and that the entropy H equals 1 both for m = 0.5 with p arbitrary and for p = 0.5 with m arbitrary. It should further be clear that, in the mathematical sense, for this very specific example, the fuzziness m and the probability p play an equal part in quantifying the overall statistical fuzzy entropy H. Mathematical details are not yet shown here, but the basics can be found in the paper presented at the 2004 SMPS conference mentioned above.
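
Pending those details, one candidate definition that reproduces the properties described above can be sketched in Python. The formula is an assumption, not taken from this page or confirmed against the SMPS paper: it takes the probability of a fuzzy event to be the expected value of its membership function (Zadeh's definition) and applies the Shannon entropy to the resulting two probabilities. The function names `binary_entropy` and `fuzzy_binary_entropy` are illustrative only.

```python
import math


def binary_entropy(q: float) -> float:
    """Shannon entropy, in bits, of a two-outcome distribution (q, 1 - q)."""
    if q <= 0.0 or q >= 1.0:
        return 0.0  # usual convention: 0 * log2(0) = 0
    return -q * math.log2(q) - (1.0 - q) * math.log2(1.0 - q)


def fuzzy_binary_entropy(m: float, p: float) -> float:
    """Assumed form of H(m, p) for the binary probabilistic fuzzy source.

    Assumption (not from the source page): the probability of a fuzzy event
    is the expectation of its membership function under (p, 1 - p).
    """
    # Probability of the fuzzy event with membership function (m, 1 - m).
    # The complementary event (1 - m, m) then has probability 1 - q.
    q = m * p + (1.0 - m) * (1.0 - p)
    return binary_entropy(q)


# Properties stated in the text, checked under the assumed definition:
for x in (0.1, 0.3, 0.9):
    assert math.isclose(fuzzy_binary_entropy(0.5, x), 1.0)  # m = 0.5, p arbitrary
    assert math.isclose(fuzzy_binary_entropy(x, 0.5), 1.0)  # p = 0.5, m arbitrary
    # m and p play an equal part: H(m, p) = H(p, m)
    assert fuzzy_binary_entropy(x, 0.7) == fuzzy_binary_entropy(0.7, x)
```

Under this assumed definition, setting m = 1 makes the two events crisp and reduces H(m, p) to the ordinary binary entropy of (p, 1 - p).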

The notion of Probabilistic Fuzzy Entropy can be applied to calculate the Statistical Fuzzy Information Gain when inducing Statistical Fuzzy Decision Trees, in cases where data sets with fuzzy input and/or fuzzy output (classification) data are available, based on a fuzzy partitioning of the combined (input and output) sample space.
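
To make the idea of an information gain over fuzzy data concrete, here is a minimal Python sketch in the style of fuzzy ID3. It is an assumption, not necessarily the Statistical Fuzzy Information Gain intended here: each sample carries membership degrees in the fuzzy input sets (the candidate branches) and in the fuzzy output classes, and class masses are accumulated as products of those memberships. All names (`entropy`, `fuzzy_information_gain`, the argument layout) are hypothetical.

```python
import math
from typing import Sequence


def entropy(weights: Sequence[float]) -> float:
    """Shannon entropy, in bits, of nonnegative weights normalized to sum to 1."""
    total = sum(weights)
    if total <= 0.0:
        return 0.0
    return -sum((w / total) * math.log2(w / total) for w in weights if w > 0.0)


def fuzzy_information_gain(
    input_memberships: Sequence[Sequence[float]],
    output_memberships: Sequence[Sequence[float]],
) -> float:
    """Entropy reduction from splitting on one fuzzily partitioned input.

    input_memberships[i][b]:  membership of sample i in fuzzy input set b
    output_memberships[i][c]: membership of sample i in fuzzy class c
    Assumes the input memberships of each sample sum to 1 (a fuzzy partition).
    """
    n_branches = len(input_memberships[0])
    n_classes = len(output_memberships[0])

    # Class masses at the parent node: summed output memberships.
    parent = [sum(row[c] for row in output_memberships) for c in range(n_classes)]

    # Class masses per branch: each sample contributes mu_in * mu_out.
    branches = [
        [
            sum(mu_in[b] * mu_out[c]
                for mu_in, mu_out in zip(input_memberships, output_memberships))
            for c in range(n_classes)
        ]
        for b in range(n_branches)
    ]

    total = sum(sum(mass) for mass in branches)
    if total <= 0.0:
        return 0.0

    # Branch entropies, weighted by the share of mass each branch receives.
    children = sum((sum(mass) / total) * entropy(mass) for mass in branches)
    return entropy(parent) - children
```

With crisp (0/1) memberships this reduces to the classical information gain used when inducing ordinary decision trees; with every sample belonging equally to all branches the gain is zero, since the split carries no information.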