“Siri, find the quickest route home.” An instruction like that given in a quiet room is no problem at all for Apple’s virtual assistant, used on mobile phones and tablets. But if you ask the same question in a busy restaurant or with a strong regional accent, Siri will find it slightly more difficult to understand what you said. This is not pure chance. Speech recognition software currently only works effectively if the person talking to it can be clearly understood, has reasonably standard pronunciation and there is little background noise. If not, we hit the limits of current technology, not only in the case of Siri, but also Google or Amazon, for example, who use similar software.

“Currently, speech recognition has little to offer to people with speech impediments, the elderly or someone who cannot speak clearly because of an illness. These are the people who could benefit most from it, for communicating with friends and family or controlling appliances and devices, for instance,” says Odette Scharenborg. As an associate professor in TU Delft's Faculty of EEMCS, she is researching automatic speech recognition, exploring how you can teach a computer to recognise languages more effectively.

Psychology of language

The current technology works thanks to a huge body of data that is used to train speech recognition systems. The body of data is made up of two parts: the speech itself and a verbatim textual record of what was said (the transcription). Recordings are made of the speech of people having conversations, reading texts aloud, speaking to a robot or computer or giving an interview or a lecture. All of these hours of audio material are transcribed, with an indication of where there is background noise or where the speaker makes other noises, such as laughing or licking their lips. But this system falls short. It is simply impossible (in terms of time, money and effort) to collect and transcribe enough data for all languages and all accents. Partly because of this, the focus has been on collecting data from the ‘average person’, which means that the elderly and children are not properly represented. People with speech impediments can also find it difficult and tiring to speak into a machine over long periods. Equally, something said by someone with a foreign accent will often not be properly recognised because of a lack of training data.

There are also some unwritten languages that are still spoken widely. “Take the Mbosi language in sub-Saharan Africa, for example, a language spoken by around 110,000 people. For the existing speech recognition systems, there is simply not enough training material available for that language. In addition, the main problem is that training a speech recognition system not only calls for the speech data, but also a textual transcription of what was said. Of course, you can record a lot, but quite aside from the fact that it takes a lot of time and costs money, transcribing what was said is not possible in an unwritten language,” says Scharenborg.

Is it possible to develop a new form of speech recognition that does not use textual transcriptions, bringing an end to the exclusion of certain groups? This is what Scharenborg is currently researching. She is taking a fundamental look at automatic speech recognition. This involves such issues as how you recognise and categorise sounds. She is also exploring the psycholinguistic side, the psychology of language.

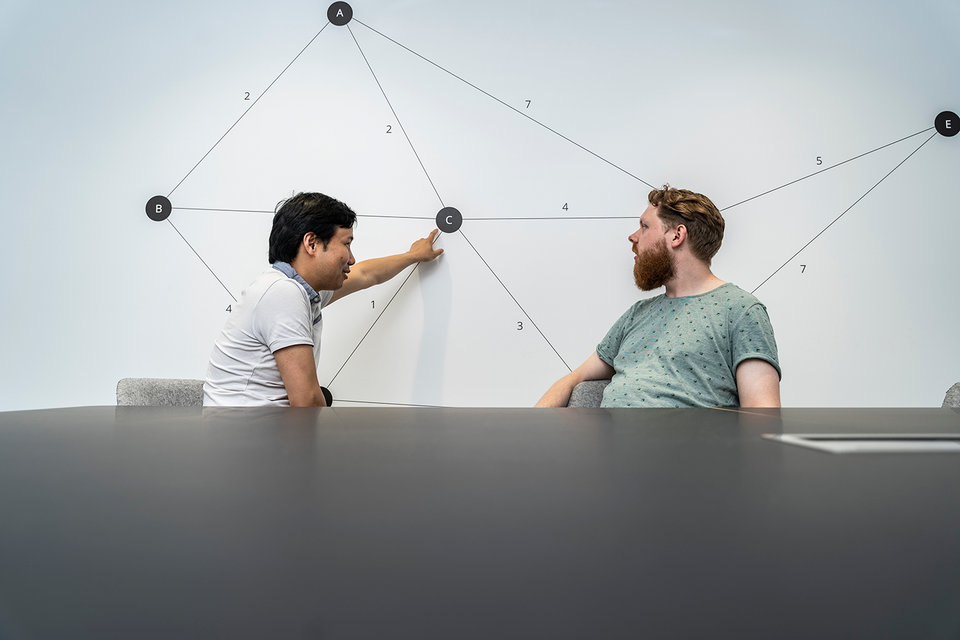

New phonetic categories

In one of the methods Scharenborg is researching, she is looking at the sounds of languages and how they differ and what they have in common. Every language has its own set of sound classes, or phonetic categories, and associated pronunciation. Take the pronunciation of bed, bet, bat or bad in English. “If a Dutch person pronounces these words, they will sound the same to many people. But that’s not the case for an English person, for whom these are four different words with four different pronunciations,” says Scharenborg.

So even in languages like Dutch and English, which have a lot of overlap, there are different sounds (the vowel in bed and bad) and the same sound is sometimes pronounced differently (the d and t at the end of the word sound the same in Dutch but different in English). “When developing a speech recognition system for an unwritten language, you can take advantage of the things that languages have in common. For example, the Dutch e sounds approximately like the English e, but the a in bat sounds something like a cross between the Dutch a and e. You can use that knowledge to train a model of the English a based on a model for the Dutch e,” explains Scharenborg.
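The idea of starting a model for a new sound from models of familiar ones can be illustrated with a minimal sketch. It is not the researchers' actual method: it assumes each vowel category is summarised by a single point of two formant frequencies (F1, F2), and the numbers, the function name `blend_categories` and the equal weighting are all hypothetical choices made for illustration.

```python
def blend_categories(mean_a, mean_b, weight=0.5):
    """Linearly interpolate between two category means.

    weight=0.0 returns mean_a, weight=1.0 returns mean_b.
    """
    return tuple((1 - weight) * a + weight * b for a, b in zip(mean_a, mean_b))


# Illustrative (F1, F2) values in Hz; assumptions, not measured data.
dutch_a = (750.0, 1300.0)
dutch_e = (550.0, 1900.0)

# Starting point for the English vowel in "bat": a cross between Dutch a and e.
english_bat_init = blend_categories(dutch_a, dutch_e)
print(english_bat_init)
```

In a real system such a starting point would only be an initialisation; the model would still need to be refined on whatever English speech is available.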

Whenever we learn a new language, we create new phonetic categories. Take a Dutch person learning the English word the: its first sound is different from that of the familiar Dutch de or te. The same goes for the a in bad or bat mentioned earlier. The researchers aim to teach the computer this process of creating a new phonetic category. In the future, this should enable speech recognition to teach itself to learn languages in this way and recognise pronunciation, even if it deviates from the average.

Safe door

The approach is based on experiments that Scharenborg conducted with human listeners. In them, test subjects listened to sounds on headphones. “L and r are two separate phonetic categories, but you can also mix them artificially. The sound you then pronounce sounds just as much like an r as an l. We gave test subjects words that ended with the letter that sounds something like a cross between r and l. If you give them the word peper/l, they interpret the sound as an r, because it makes peper, which is actually a real Dutch word (pepper). After that, the test subjects start classing it as an r. The opposite happens if the same sound is given in the word taker/l, when people will interpret the same ambiguous sound as l, because takel is a Dutch word and taker isn’t. You actually retune it in your head.”
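The retuning described above can be mimicked in a toy sketch. It assumes a one-dimensional continuum from a clear l (0.0) to a clear r (1.0) with a single movable boundary; the function names, the `rate` and `margin` parameters and the two-word lexicon are hypothetical simplifications, not the model used in the experiments.

```python
LEXICON = {"peper", "takel"}  # the two Dutch words from the example


def lexical_label(stem):
    """Return the reading of the ambiguous final sound that makes a real word."""
    candidates = [c for c in ("r", "l") if stem + c in LEXICON]
    return candidates[0] if len(candidates) == 1 else None


def classify(boundary, sound):
    """Sounds above the boundary are heard as r, all others as l."""
    return "r" if sound > boundary else "l"


def retune(boundary, sound, label, rate=0.5, margin=0.1):
    """Shift the boundary so that `sound` falls more clearly on the `label` side."""
    target = sound - margin if label == "r" else sound + margin
    return boundary + rate * (target - boundary)


ambiguous = 0.5  # halfway between l and r

# Hearing "pepe?" pulls the boundary down, so the sound is now classed as r.
after_peper = retune(0.5, ambiguous, lexical_label("pepe"))
print(classify(after_peper, ambiguous))

# Hearing "take?" pushes the boundary up, so the same sound is classed as l.
after_takel = retune(0.5, ambiguous, lexical_label("take"))
print(classify(after_takel, ambiguous))
```

The point of the sketch is only that the same ambiguous sound ends up in a different category depending on which word it was heard in.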

For these listening experiments, test subjects sit in a room that has been specially developed. On the ground floor of the Mathematics & Computer Science building, Scharenborg points to a cabin, roughly the size of a garden shed. She opens the heavy, wide door. It is so thick that it looks like a safe door. “It’s in here that we do the experiments. There’s no distraction from other sounds. Can you feel the pressure on your ears? That’s because the room is low-noise,” says Scharenborg.

In the cabin, there is a chair and a desk. On that, there are headphones and a computer. On the screen, test subjects can read words and they can hear sounds via the headphones. There is also a window. This ensures that the testers always have contact with a supervisor who can see if everything is working as it should.

The data that the scientists collect in the test is being used to make a new model that should ultimately result in better speech recognition. In it, the model itself judges whether or not a new phonetic category is needed and you no longer have to enter it. This is a major step forward for automatic speech recognition. The model was inspired by our brain and our deep neurological system, says Scharenborg. It is known as a deep neural network. “We are the first to investigate self-learning speech recognition systems and we are currently working with a colleague in the United States to develop technology for this. I expect to see the first results within two years.”
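What it could mean for a model to judge for itself whether a new phonetic category is needed can be shown with a deliberately simple sketch. It is not the deep neural network described here: it assumes sounds are points in a small feature space and uses a hypothetical distance threshold as the criterion, with `assign_or_create`, `threshold` and the coordinates all invented for illustration.

```python
import math


def nearest_category(categories, sound):
    """Return the name of the closest known category and its distance."""
    name = min(categories, key=lambda n: math.dist(categories[n], sound))
    return name, math.dist(categories[name], sound)


def assign_or_create(categories, sound, threshold, new_name):
    """Assign `sound` to a known category, or add a new one if none is close."""
    name, distance = nearest_category(categories, sound)
    if distance > threshold:
        categories[new_name] = sound  # no existing category fits: create one
        return new_name
    return name


# Hypothetical feature coordinates for two familiar categories.
categories = {"d": (0.0, 0.0), "t": (1.0, 0.0)}

print(assign_or_create(categories, (0.1, 0.0), threshold=0.5, new_name="new"))
print(assign_or_create(categories, (3.0, 4.0), threshold=0.5, new_name="th"))
print(sorted(categories))
```

A fixed threshold is the crudest possible criterion; it stands in here for whatever learned decision the actual model makes.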

Bridging the divide between humanities and sciences

Will this soon produce an effective system that can rival Siri? We cannot really expect that any time soon. The technology is still in its very early days. Scharenborg's research is primarily fundamental in nature. Despite that, it is laying the foundations for a new type of automatic speech recognition. A program that works perfectly is still a distant prospect.

In a previous NWO Vidi research project, Scharenborg also explored speech recognition. “Everyone knows that it’s harder to understand someone you are talking to if there's a lot of background noise. It becomes even more difficult if someone isn’t speaking their native language, but another language because they want to order something to drink in a busy café on holiday. But why is it so much more difficult to understand each other when there’s background noise? And why is this more difficult in a foreign language than in your native tongue? I was keen to find that out and ended up learning more about how humans recognise speech.”

In her current research, Scharenborg is combining insights from psycholinguistics with the technology of automatic speech recognition and deep learning. Normally, these are separate fields. Psycholinguistics is mainly the preserve of scientists and social scientists and focuses on language and speech and how our brains recognise words. Speech recognition and deep learning are about technology and how you can use it to make a self-learning system.

“In order to make further progress, we need to combine both fields. It seems rather strange to me that there are not more people who combine them. The aim of the people and the machines is the same: to recognise words,” says Scharenborg.

The academics working in the two different fields often don’t even know each other. This is something that Scharenborg aims to change. For years, she has been organising the largest conference on the subject of speech recognition, bringing together both communities. “Both groups still focus too much on their own field. For applied scientists working on deep learning, speech is a type of data that they use to develop technology. However, psycholinguistic researchers often have relatively little knowledge of computers. In my view, these fields complement and can learn from each other, in terms of knowledge about both speech and computer systems. I want them to engage in discussion.”

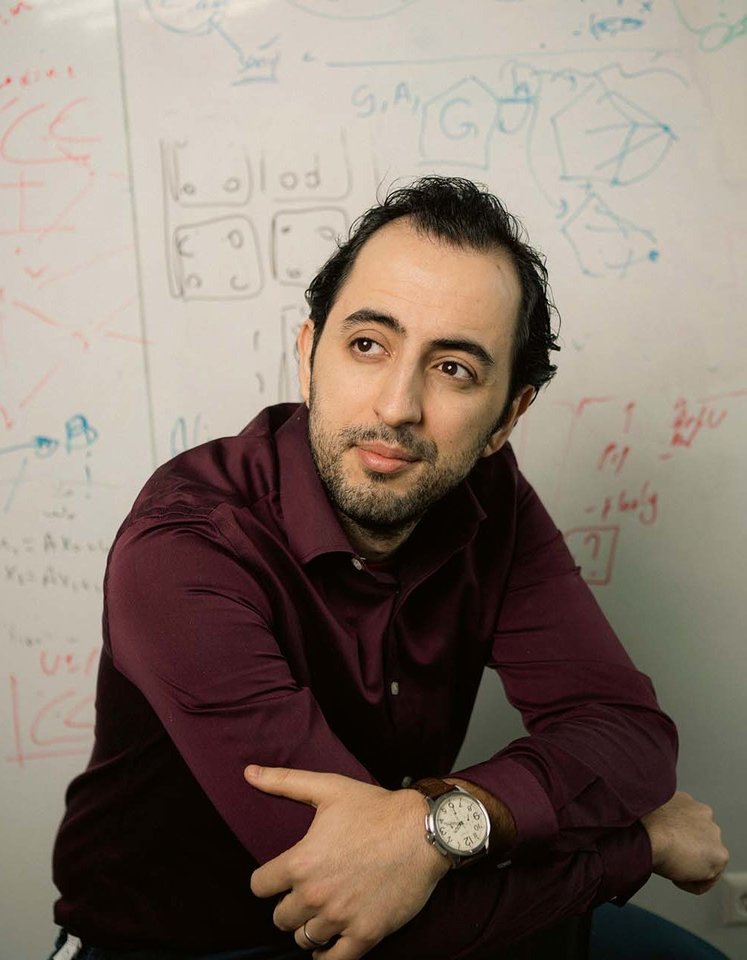

Knight Rider

Scharenborg has extensive experience of both fields and therefore aims to bridge the divide. Before joining TU Delft, she was associate professor in the Linguistics department at Radboud University. “My own field is speech recognition, but I also work with psycholinguistics. It makes logical sense for me to do my research at TU Delft now, because the technical expertise is here and research is conducted across disciplines. That’s why I don’t feel like an outsider, even though I’m virtually the only person in the world doing this research.”

Even at secondary school, Scharenborg was good at both humanities and science subjects. “I was unsure about what to study, which is why I chose a programme focusing on language, speech and computer science at Radboud University. I actually knew nothing about speech, but at the very first lecture, I knew I was in the right place. Almost everyone talks to each other and a lot of communication is done through speech. You can look at it from a physics perspective by focusing on waves that propagate speech, but also explore how people recognise it. Being able to approach it from different sides really appealed to me.”

Without actually realising it, she was already working with speech. “For example, I really loved the TV series Knight Rider. In it, David Hasselhoff played the hero Michael Knight, who could speak to his car KITT. This supercar not only understood what was said, but also talked back and was intelligent. Knight and KITT also used a wrist watch to communicate when they were apart. Even then, I found it really interesting and that fascination has never gone away – I’m still working with speech and technology.”

Text: Robert Visscher | Photo Odette: Frank Auperlé, photos cabin: Dave Boomkens.