Autonomous systems must adapt their behavior and operations to particular circumstances. With ongoing developments in machine learning, sensors, and connectivity technologies, the range of applications for autonomous systems is broadening, and their implications are raising increasing concerns in our society. We need to ensure that such systems remain under meaningful human control, i.e. that humans, not computers and their algorithms, are ultimately in control of and thus morally responsible for relevant actions.

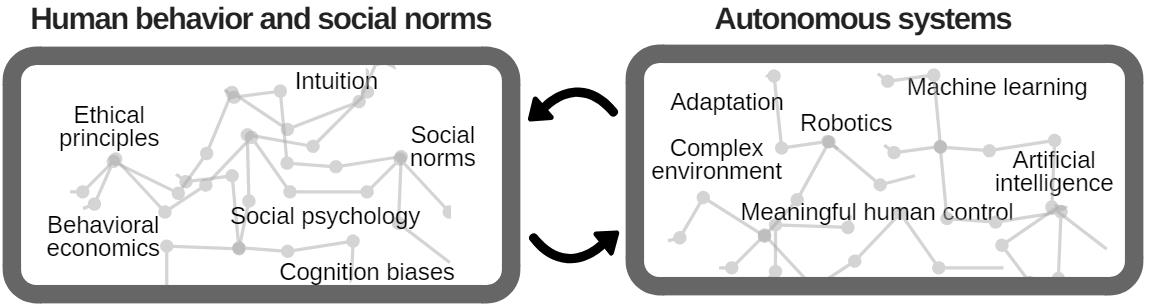

Human-machine interactions and adherence to societal and ethical norms may be limited by a poor understanding and modeling of human (moral) reasoning processes and ethics. Incorporating human-centric moral reasoning into autonomous systems, along with the ability to handle uncertainty on a (meta)reasoning level, could counterbalance unforeseen and undesirable shortcomings of Artificial Intelligence (AI) and robotics.

In this project, we aim to incorporate uncertainty (e.g. normative uncertainty, context-dependency, and bounded rationality) into autonomous systems to achieve meaningful human control. Building on an extensive body of knowledge of moral responsibility and ethical theories, we will propose methods and metrics to support the design and engineering of accountable and trustworthy autonomous systems with some degree of “conscience”. We will apply state-of-the-art machine learning and agent-based simulation, using features inspired by human cognition and sociological processes.

This is an interview with Luciano Cavalcante Siebert, from July 2019.

Interview and text: Agaath Diemel.

Social media, our smartphones, algorithmic decision-making systems: we can clearly see the increasing influence of technology on our lives, and it is not always a good thing. A lot of technology is profit-centric rather than human-centric. We should take back ownership; otherwise, at the current rate of exponential development, things may get out of control ten years from now. That is why AiTech propagates the concept of meaningful human control. You should always be able to trace a decision taken by a robot or computer back to a human decision, and autonomous systems should be able to understand and adapt to moral reasons relevant to the people they interact with or affect. We cannot defer that responsibility and hide behind saying the AI told us to do something.

AI should understand how humans reason

When smartphones or smart cars act on our behalf, do they really understand what we feel or want? And how can such systems act appropriately unless they do? Machine reasoning is different from human reasoning, because machines are architecturally different from humans, but they should understand how we reason and be inspired by it. It was during my years at LACTEC, the Brazilian Institute of Technology for Development, that I started looking into human reasoning. There, I was working on demand response management programmes for the energy market. To help people use energy more efficiently, you should first understand how they act in practice. What happens in real life is never simple; so many factors influence people’s behaviour. For example, do they buy an electric car because it is sustainable or as a mark of their social status? And once they have it, will they continue their sustainable behaviour, or will they consider they are already doing their bit?

Practical ways of dealing with ethics

In my research project we are trying to incorporate human reasoning processes into artificial intelligence. That will make machine decisions more understandable and less of a ‘black box’. We focus on ethics, because it is important that artificial intelligence can support the moral values we hold as a society. Humans have been thinking about ethics since ancient times and there are many philosophical theories on ethics; in real-life situations, however, we have practical ways of dealing with this. We evaluate each situation, weighing feelings like empathy or guilt, and use moral heuristics, or mental shortcuts, to come to a decision. We know that killing is morally wrong; so is lying. But how about lying to save someone’s life? That is a situation that entails moral uncertainty. Machines should take into consideration that not everything is certain when making decisions based on morality. This uncertainty should also be a decision factor: you can act on uncertainty, or you can decide not to act. Humans do this all the time.

Combining human reasoning with machine learning

How can a machine understand what the person it is interacting with is thinking? Theory of Mind plays a role here, the ability to think about your own mental state and that of others. To make machine thinking more like human thinking, we are trying to combine human-like reasoning with machine learning. We will look for variations of human behaviour for specific situations and contexts that we can try and incorporate so machines can learn from them. At the same time, we want machines to go beyond that: they should not do anything that is detrimental to society as a whole. That is why we are also trying to incorporate ethical theories.

Reaching decisions in real time

This is not easy in control situations where the machine in question operates in real time. An autonomous car, for example, cannot ask its driver whether it should brake or not; there simply isn’t the time. So what is the right decision in the given context? Its goal is not just to maximise the happiness of everybody around it, or to follow the law. To reach a decision, the vehicle should track what is going on around it and who is affected directly and indirectly by its decision, taking into account any cascading effects. Just like humans do, it should consider all these factors and then deliberate in real time about the best options: a, b or c, or a combination of these, or none of them.

People can change their standards

One way we are researching this is with the so-called ultimatum game. That is a problem in economics that has been studied for decades. It works like this: you get a certain sum of money, but you only get to keep it if you share some of it with another player. How much you offer that player is up to you, but if they refuse, neither of you gets anything. Two values play a role here: wealth and fairness. Should I maximise my profit or play fair? That is a moral question. Social norms also play a big role here. You can be inclined towards fairness, but if every other player just offers you a small percentage, you will change your behaviour accordingly. Earlier work has shown that computer agents playing this game respond in similar ways to people playing it. We are now making a system play this game and then evaluate these drivers – wealth and fairness – in order to learn to identify the moment that people change their standards. The first step towards intervention is to notice when behaviour is inappropriate.
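To make the rules of the game concrete, here is a minimal Python sketch. It is not the system being built in the project: the `Responder` class, its starting `threshold`, the `adaptation` rate and the `tolerance` used to flag a change are all hypothetical choices made for illustration. The responder accepts an offer if it meets its current fairness standard, and that standard drifts towards the offers it keeps seeing, which is one simple way to mimic the social-norm effect described above.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Responder:
    """Hypothetical responder whose fairness standard drifts toward observed offers."""

    threshold: float = 0.4   # smallest share of the pot it accepts (assumed value)
    adaptation: float = 0.3  # how fast the standard follows observed offers (assumed value)

    def respond(self, offer_share: float) -> bool:
        accepted = offer_share >= self.threshold
        # Social-norm effect: move the standard part of the way toward the offer just seen.
        self.threshold += self.adaptation * (offer_share - self.threshold)
        return accepted


def play_round(pot: float, offer_share: float, responder: Responder) -> tuple[float, float]:
    """Return (proposer payoff, responder payoff); both get nothing on a refusal."""
    if responder.respond(offer_share):
        return pot * (1 - offer_share), pot * offer_share
    return 0.0, 0.0


def first_standard_shift(offers, responder, tolerance=0.1):
    """Index of the first round after which the responder's standard has moved
    more than `tolerance` away from where it started, or None if it never does."""
    baseline = responder.threshold
    for round_index, offer_share in enumerate(offers):
        responder.respond(offer_share)
        if abs(responder.threshold - baseline) > tolerance:
            return round_index
    return None


if __name__ == "__main__":
    print(play_round(100, 0.5, Responder()))   # a fair offer is accepted
    print(play_round(100, 0.1, Responder()))   # a low offer is refused
    print(first_standard_shift([0.1] * 5, Responder()))
```

With these assumed numbers, a responder who is repeatedly offered 10% lowers its standard step by step, and `first_standard_shift` reports the round in which the drift exceeds the tolerance. A real study would estimate such parameters from observed play rather than fix them by hand.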

Kill the driver or the pedestrian?

Another way to move forward is with the help of MIT’s database from the Moral Machine. The Moral Machine is a serious gaming platform where people are asked to give their preferences for decisions made by machine intelligence such as self-driving cars. They are given scenarios where they have to choose between e.g. letting an autonomous vehicle kill its driver or a pedestrian crossing the road. But what if the pedestrian is a child or an older person, a man or a woman, someone you know or someone you don’t know? Does that alter your perspective? These may not be real-life situations, but these questions have serious ethical complications. We are going to use the data and try to match them to ethical theories. Do we encounter just the utilitarian view of minimising casualties, or are there maybe regional perspectives? Do the responses match any ethical theories at all?
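One very simple way to picture "matching the data to an ethical theory" is to count how often people's choices agree with what a given theory would recommend. The Python sketch below does this for the utilitarian view of minimising casualties only. The `Response` record and its fields are hypothetical and do not reflect the actual format of the Moral Machine dataset, and the toy records are made up purely to exercise the function.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Response:
    """One hypothetical dilemma response: casualties per option and the option chosen."""

    casualties_a: int
    casualties_b: int
    chosen: str  # "a" or "b"


def utilitarian_agreement(responses):
    """Share of decidable responses that pick the option with fewer casualties.

    Dilemmas with equal casualties are skipped, because a pure casualty count
    does not prefer either option there. Returns None if nothing is decidable.
    """
    decidable = [r for r in responses if r.casualties_a != r.casualties_b]
    if not decidable:
        return None
    matches = sum(
        1
        for r in decidable
        if r.chosen == ("a" if r.casualties_a < r.casualties_b else "b")
    )
    return matches / len(decidable)


# Toy records invented for illustration; they are NOT Moral Machine data.
toy_responses = [
    Response(casualties_a=1, casualties_b=3, chosen="a"),
    Response(casualties_a=2, casualties_b=1, chosen="a"),
    Response(casualties_a=1, casualties_b=1, chosen="b"),
    Response(casualties_a=4, casualties_b=2, chosen="b"),
]

if __name__ == "__main__":
    print(utilitarian_agreement(toy_responses))
```

An agreement rate well below 1 would suggest that other considerations, such as who the people involved are or regional norms, also shape the choices. Grouping responses by region before calling the function would be one way to look for the regional perspectives mentioned above.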

Of course, in two years’ time we won’t have the definitive answer on meaningful human control. But I am sure we will have made progress – at a practical level, by improving algorithms, but also at the level of understanding how we can incorporate philosophy and human behaviour into AI, and how we can design AI systems accordingly. That interdisciplinary approach is key to AiTech.