Intelligent decision making in real-world scenarios often requires an agent to plan ahead while taking into account its limitations in sensing and actuation. These limitations lead to uncertainty about the state of the environment, as well as about how the environment will respond to a given action. Planning under uncertainty given probabilistic models is captured by the Markov Decision Process (MDP) framework. The MDP model assumes full knowledge of the state, which in many realistic problems is impossible or prohibitively expensive to obtain. To address this problem, Partially Observable MDPs (POMDPs) have been proposed, in which information about the environment’s state is obtained only through noisy sensors with a limited view.

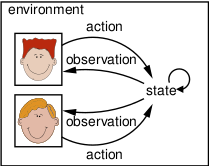
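
As a rough illustration of what partial observability means in practice, a POMDP agent typically maintains a belief (a probability distribution over states) and revises it after each action and observation using Bayes' rule. The sketch below is a minimal example under stated assumptions: the function name `belief_update`, the table layouts `T[a][s][s_next]` and `O[a][s_next][o]`, and the two-state toy problem with an 85% accurate sensor are all hypothetical and are not taken from any of the group's software.

```python
def belief_update(belief, action, observation, T, O):
    """Bayes filter: b'(s') is proportional to O[a][s'][o] * sum_s T[a][s][s'] * b(s)."""
    n = len(belief)
    unnormalized = []
    for s_next in range(n):
        # Prediction step: probability of reaching s_next under the transition model.
        predicted = sum(T[action][s][s_next] * belief[s] for s in range(n))
        # Correction step: weight by the likelihood of the received observation.
        unnormalized.append(O[action][s_next][observation] * predicted)
    total = sum(unnormalized)
    if total == 0.0:
        raise ValueError("observation has zero probability under the model")
    return [p / total for p in unnormalized]


# Hypothetical toy problem: two states, one sensing action (index 0)
# that leaves the state unchanged and reports it correctly 85% of the time.
T = [[[1.0, 0.0],
      [0.0, 1.0]]]
O = [[[0.85, 0.15],
      [0.15, 0.85]]]

b0 = [0.5, 0.5]                       # initially uninformed about the state
b1 = belief_update(b0, 0, 0, T, O)    # observation 0 shifts belief toward state 0
b2 = belief_update(b1, 0, 0, T, O)    # a repeated observation sharpens it further
```

A full POMDP planner would choose actions based on this belief rather than on the unobserved true state; the sketch covers only the state-estimation step.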

For multiagent decision-making problems, centralized MDP/POMDP solutions are insufficient because of scalability and communication constraints. The canonical model that has emerged is a decentralized extension of the centralized model, called the Decentralized POMDP (Dec-POMDP). Dec-POMDPs model local perception, interaction and communication, and local actuation. They are studied in an active area of research referred to as multiagent sequential decision making (MSDM) under uncertainty.

In our group these techniques are studied in the context of the following projects:

- Gaming beyond the copper plate

- Dynamic Capacity Control and Balancing in the Medium Voltage Grid

- Smoover

- Dynamic Contracting in Infrastructures

More information, including software, tutorials, workshops, and benchmark problems, can be found at http://masplan.org or by contacting Matthijs Spaan.