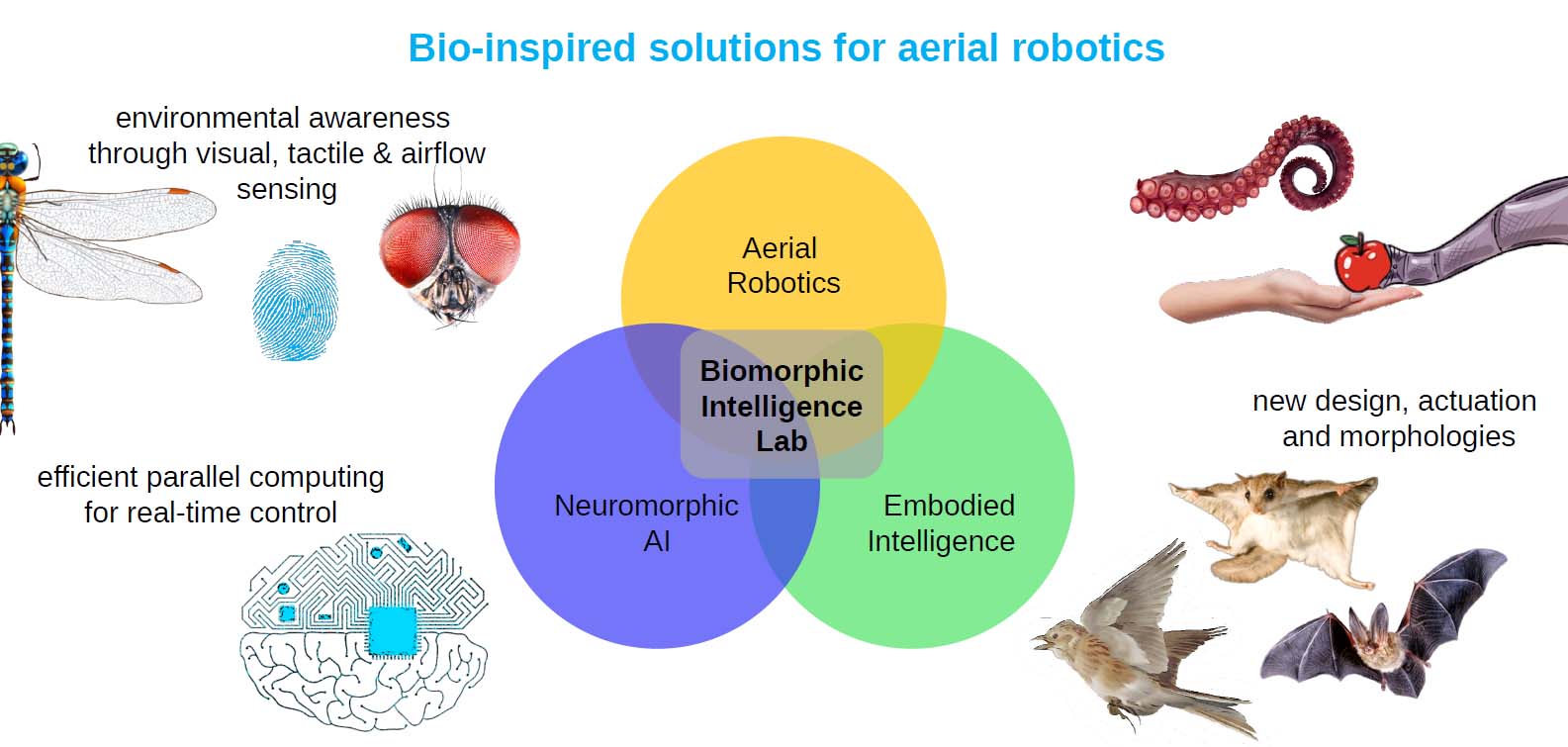

BioMorphic Intelligence Lab

Biologically inspired solutions for aerial robotics

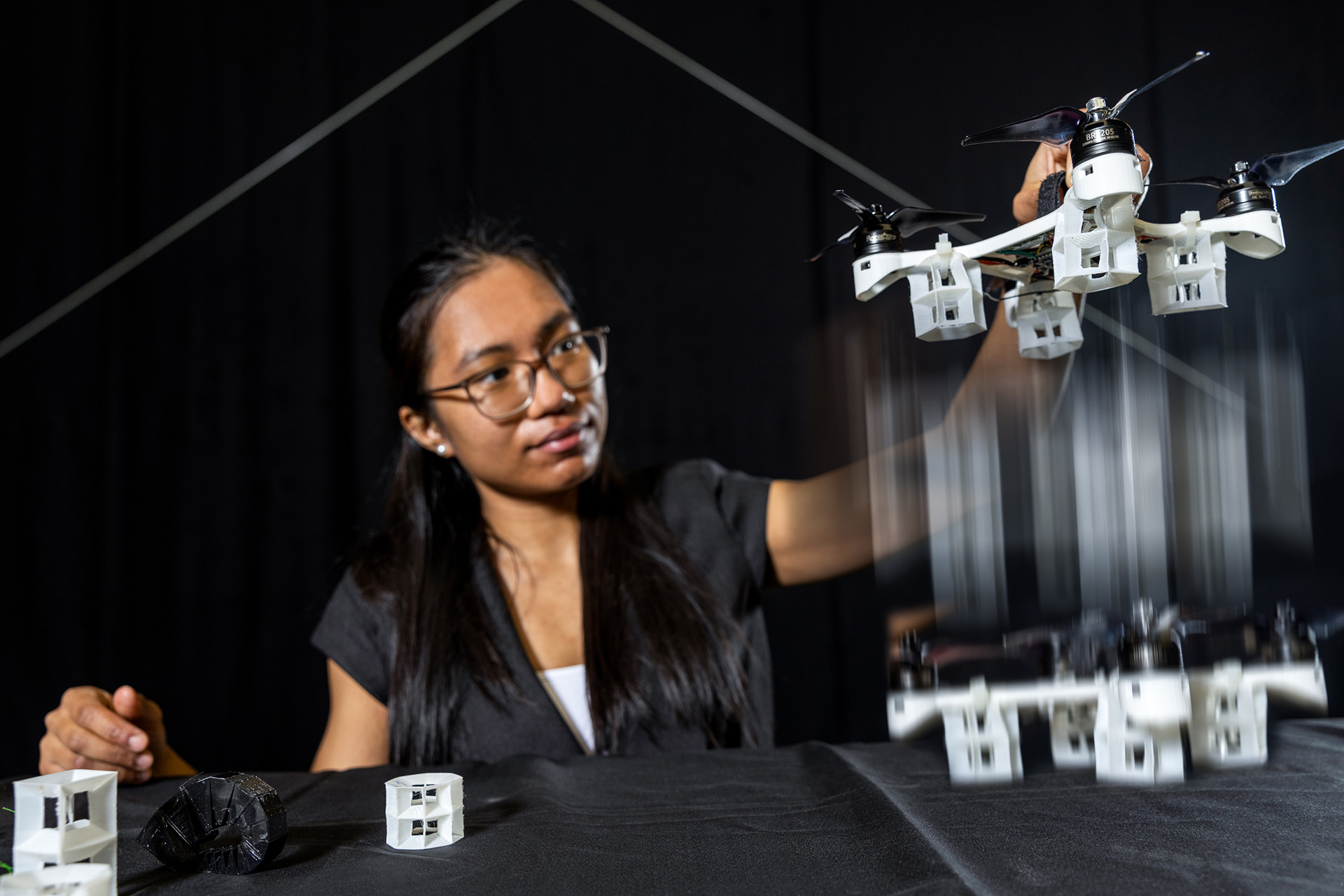

Aerial robots are now ubiquitous. Thanks to their nimbleness, manoeuvrability, and affordability, drones are used in many sectors to monitor, map, and inspect. As a next step, flying robots can offer more by physically interacting with their surroundings through anthropomorphic manipulation capabilities. Several overarching challenges remain for this new class of aerial robots, and biologically inspired solutions can address them across three key areas of robot performance: sensing the environment, processing this information, and acting upon the results.

SENSE

Bio-inspired perception (e.g., visual or tactile feedback) can provide the drone with information on its environment, mimicking animals’ sensory feedback. Using retina-like event cameras, drones can avoid obstacles and detect objects at a fraction of the power and latency of conventional hardware and algorithms. Enhanced tactile feedback can also trigger different behaviors in response to different force stimuli.

THINK

Bio-inspired, brain-like models from neuromorphic AI can help lower the computational load and speed up sensory data processing for navigation, which boosts real-time control and autonomy. Compliance embedded in the robot's control also favors safe and robust interaction with unknown environments and targets.

ACT

Bio-inspired design and materials make the drone's body fit for interaction with unknown objects and enable a safe response to external disturbances. Robot morphology can be inspired by flying animals’ shape, configuration, and materials. Together, these features create embodied intelligence and can offload part of the behavioral complexity otherwise handled by the brain.

The BioMorphic Intelligence Lab aims to tackle robustness and efficiency challenges for interacting drones, using biologically inspired solutions for both the 'body' and the 'brain' and applying embodied intelligence and neuromorphic AI techniques.

The BioMorphic Intelligence Lab is part of the TU Delft AI Labs programme.

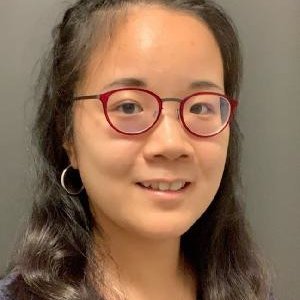

The Team

Education

Courses

2024/2025

- Physical Interaction for Aerial and Space Robots | AE4324

- Fundamentals of AI | IFEEMCS520100

- Data Mining | CSE2525

- Joint Interdisciplinary Project | TUD4040

- Capstone Applied AI Project | TI3150TU

- RO MSc Thesis | RO57035

- Research Project | CSE3000

- Computer Science program | IN5000

- Bio-inspired Intelligence and Learning for Aerospace Applications | AE4350

Master projects

Open for master thesis

Ongoing

- Autonomous Forest Canopy Exploration, Salua Hamaza, Rita Santos (2023/2024)

- Design of an Inherently Balanced Aerial Manipulator, Salua Hamaza, Michele Bianconi (2023/2024)

- Autonomous Quadrotor Perching, Salua Hamaza, Georg Strunck (2023/2024)

- Time steps in spiking neural networks, Nergis Tomen, Alex de Los Santos Subirats (2023/2024)

- Self-organized criticality in spiking networks of non-leaky integrator neurons, Nergis Tomen, Luca Frattini (2023/2024)

- NeuroBench: Benchmarking spiking and analog neural networks for primate tracking data, Nergis Tomen, Paul Hueber (2022/2023)

Finished

- Design of an inherently fully dynamically balanced aerial manipulator with omnidirectional workspace, Alexander Bom (2024)

- Towards a Robust Wireless Real-Time Ecological Monitoring System, Luke de Waal (2024)

- A lightweight quadrotor autonomy system, Andreas Zwanenburg (2024)

- Aerial Perching via Active Touch: Embodying Robust Tactile Grasping on Aerial Robots, Anish Jadoenathmisier (2023)

- Automated Aerial Screwing with a Fully Actuated Aerial Manipulator, Salua Hamaza, Paul Reck (2022/2023)

- Vision-based Quadrotor Perching on Tree Branches, Salua Hamaza, Seamus McGinley (2022/2023)

- Simple Online Visual Object Tracker Fusion based on Distributed Kalman Filtering, Nergis Tomen, Yigen Zhong (2022/2023)

- Optimizing Event-Based Vision by Realizing Super-Resolution in Event-Space: an Experimental Approach, Nergis Tomen, Mahir Sabanoglu (2022/2023)

- Optical Flow Upsamplers Ignore Details: Neighborhood Attention Transformers for Convex Upsampling, Nergis Tomen, Sander Gielisse (2022/2023)

- Making It Clear: Using Vision Transformers in Multi-View Stereo on Specular and Transparent Materials, Nergis Tomen, Pieter Tolsma (2022/2023)

- Battle the Wind: Improving Flight Stability of a Flapping Wing Micro Air Vehicle under Wind Disturbance, Salua Hamaza, Sunyi Wang (2021/2022)

- ADAPT: A 3 Degrees of Freedom Reconfigurable Force Balanced Parallel Manipulator for Aerial Applications, Salua Hamaza, Kartik Suryavansi (2021/2022)

- Sensorless Impedance Control for Curved Surface Inspections Using the Omni-Drone Aerial Manipulator, Salua Hamaza, Hani Abu-Jurji (2021/2022)

- Embodied airflow sensing for improved in-gust flight of flapping wing MAVs, Salua Hamaza, Chenyao Wang (2021/2022)

- A Centralised Approach to Aerial Manipulation on Overhanging Surfaces, Salua Hamaza, Martijn Brummelhuis (2020/2021)